Compare commits

3 commits

a2ceb6d535 ... 31794b6e31

| Author | SHA1 | Date |
|---|---|---|
| | 31794b6e31 | |
| | 7d1199c5db | |
| | 240c1d5eee | |

38 changed files with 1078 additions and 1 deletion

@@ -6,4 +6,5 @@ This will eventually replace my existing WordPress blog at https://bune.city.

The website itself is licensed under the AGPLv3, but:

- All files in the `_posts` and `_drafts` folders are instead licensed under CC-BY-SA 4.0.
- The "Liberation" fonts (`/assets/fonts/Liberation*`) are licensed under the SIL Open Font License.
- The "Material Icons" webfont file is licensed under the Apache 2 license.
- Everything inside `/assets/plyr` is licensed under the MIT ("Expat") license.

@@ -0,0 +1,173 @@

---
title: "Lynne Teaches Tech: What do the various graphics settings in PC games mean?"
author: Lynne
categories: [Lynne Teaches Tech]
---

This is going to be a fair bit longer than the average Lynne Teaches Tech post, due to the sheer amount of info that needs to be covered.

Most PC games include a smattering of graphical options to configure, ranging from the obvious, like brightness and resolution, to more confusing ones, such as chromatic aberration and ambient occlusion. This post will attempt to explain as many as possible, and may occasionally be updated to add more. Feel free to leave a comment if I missed one!

<!--more-->

This article is structured so that the easier-to-understand sections come first, with the more complicated stuff later on. There's also a table of contents provided so you can jump to a particular section if you're using this post as a reference, or if you just want to know about one thing in particular.

*While some people use the term PC to refer exclusively to Windows computers, in this post, it will mean any operating system aimed at traditional computers, such as Windows, macOS, and Linux.*

Display
-------

This section covers settings that affect how the image is displayed on the screen.

### Resolution

Every display has a resolution: the number of pixels it has horizontally and vertically. Your computer outputs video at some resolution, usually the same one as your display, to ensure that everything looks as good as it can.

Higher resolutions mean that your graphics card needs to create more information. It's a lot simpler to render 1,000 pixels than it is to render 10,000, because the graphics card doesn't need to do as much work. One common display resolution is 1920 pixels wide by 1080 pixels high, or 1920x1080 (also known as 1080p). At a resolution of 1920x1080, your graphics card needs to generate 2,073,600 pixels, or 2.07 megapixels, of information. Meanwhile, 1280x720, another common standard, is only 0.92 megapixels, and is therefore much easier for a computer to process. 4K, or 3840x2160\[efn\_note\]This is the "standard" 4K resolution, but there are others\[/efn\_note\], is a massive 8.29 megapixels.
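
The numbers above are just width multiplied by height. Here's a quick Python sketch that reproduces them. The dictionary of resolutions is only the examples from this section, nothing more.

```python
# The example resolutions from this section: (width, height) in pixels.
RESOLUTIONS = {
    "720p": (1280, 720),
    "1080p": (1920, 1080),
    "4K (3840x2160)": (3840, 2160),
}

def megapixels(width, height):
    """Pixels the graphics card must produce for every frame, in millions."""
    return width * height / 1_000_000

for name, (w, h) in RESOLUTIONS.items():
    print(f"{name}: {w * h:,} pixels = {megapixels(w, h):.2f} MP")
# 720p: 921,600 pixels = 0.92 MP
# 1080p: 2,073,600 pixels = 2.07 MP
# 4K (3840x2160): 8,294,400 pixels = 8.29 MP
```

4K turns out to be exactly four times as many pixels as 1080p, and nine times as many as 720p. That is why it's so much more demanding.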

![A comparison of different resolutions. *Photo on* [*Unsplash*](https://unsplash.com)](https://wasabi.lynnesbian.space/bune-city/2019/05/a4a48eae19d6ecf4f5a01eed7471182d/comparison.jpg){.wp-image-337}

Higher resolutions look better, but come at the cost of performance. If your computer's really struggling to play a game, you might want to consider turning this down, but be aware that things will look noticeably blurrier, and you'll get a lot less detail.

#### Internal Resolution

Some games offer a setting to change the internal resolution as well as the resolution. This might seem a little confusing, but it's actually quite simple.

Suppose you have a 1080p display, but you can't handle running a game at that resolution, so you turn it down to 720p. Everything becomes less detailed, including the in-game menus and overlays. Internal resolution fixes that: it lets the game render its 3D world at a lower resolution, while the menus remain at full resolution. This means that you get crisp subtitles, buttons, health meters, and more, while the expensive part of the game still runs at a lower resolution. If a game offers you the option to change the internal resolution, you'll want to use it instead of the standard resolution setting. Drawing the menus at full resolution costs very little, so you get a big improvement in clarity for almost no performance penalty.
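
Here's a rough sketch of the maths behind an internal resolution setting. I'm assuming the setting is a per-axis scale factor, which is how many games present it (often labelled "render scale" or "resolution scale"). The function names are made up for illustration. The menus and HUD are assumed to still be drawn at the full output resolution on top.

```python
def internal_resolution(output_w, output_h, scale):
    """Resolution the 3D scene is rendered at for a given per-axis scale.

    scale=1.0 means native resolution. The result is later stretched to the
    full output resolution, and the UI is drawn on top at full resolution.
    """
    return round(output_w * scale), round(output_h * scale)

def pixel_fraction(output_w, output_h, scale):
    """Share of the native pixel count the GPU has to render for the 3D scene."""
    w, h = internal_resolution(output_w, output_h, scale)
    return (w * h) / (output_w * output_h)

print(*internal_resolution(1920, 1080, 2 / 3))       # 1280 720
print(f"{pixel_fraction(1920, 1080, 2 / 3):.0%}")    # 44%
```

In other words, rendering a 1080p game at 720p internally means the GPU only has to render about 44% of the pixels for the expensive 3D part of each frame.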

### Brightness, Contrast, Saturation, and Gamma

These four are pretty simple, so I won't spend too long on them.

Brightness is, as the name suggests, how bright the image is. Turning up the brightness makes everything brighter, from the shadows to the lights.

Contrast is the difference between the darkest and brightest parts of the image. A low contrast means the range of brightness is squished together, with lighter blacks and dimmer whites. Having this too low makes everything look flat and washed out, but having it too high makes it look like the brightness is exaggerated.

Saturation is how vibrant the colours are. High saturation makes colours "pop" more, but also makes things look less realistic.
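
To make the three sliders more concrete, here's a minimal Python sketch that applies them to a single pixel. The formulas are simple ones I've picked for illustration. Each game (or graphics driver) implements these sliders its own way, usually in a post-processing shader. The greyscale weights used for saturation are the standard Rec. 709 luma coefficients.

```python
def clamp(x):
    """Keep a channel between 0.0 (black) and 1.0 (full intensity)."""
    return max(0.0, min(1.0, x))

def adjust_pixel(rgb, brightness=0.0, contrast=1.0, saturation=1.0):
    """Apply simple brightness, contrast and saturation tweaks to one pixel.

    rgb: (r, g, b), each from 0.0 to 1.0.
    brightness: amount added to every channel (0.0 = unchanged).
    contrast: scale around mid-grey 0.5 (1.0 = unchanged, below 1 squishes).
    saturation: 0.0 = fully grey, 1.0 = unchanged, above 1 = more vivid.
    """
    # Brightness: shift everything up (or down) by the same amount.
    r, g, b = (c + brightness for c in rgb)
    # Contrast: push values away from (or towards) mid-grey.
    r, g, b = ((c - 0.5) * contrast + 0.5 for c in (r, g, b))
    # Saturation: blend each channel with the pixel's grey (luma) value.
    grey = 0.2126 * r + 0.7152 * g + 0.0722 * b
    r, g, b = (grey + (c - grey) * saturation for c in (r, g, b))
    return tuple(clamp(c) for c in (r, g, b))

print([round(c, 2) for c in adjust_pixel((0.8, 0.4, 0.2), saturation=0.0)])
# [0.47, 0.47, 0.47]: an orange pixel turned grey
print(adjust_pixel((0.9, 0.9, 0.9), brightness=0.5))
# (1.0, 1.0, 1.0): pushed past white, so the detail is lost
```

The last line also shows why cranking brightness too far is a bad idea. Anything pushed past full white gets clipped, and every bright pixel ends up the same.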

Gamma is \[TODO\] https://gaming.stackexchange.com/a/80986

### Fullscreen, Windowed, and Borderless Windowed Mode

Fullscreen programs take direct control of your computer's display. If a fullscreen game is running at a different resolution than your operating system, the game will tell the operating system to change the display resolution to match. This can occasionally cause problems with alt-tab if the game isn't built to handle being in the background, and if the game changed the resolution, alt-tabbing will sometimes mean switching the resolution back to the previously set one.

Windowed mode means that the game runs in a window, just like pretty much every other program.

Borderless windowed mode is a special setting that is sort of a mix between the two. The game will run in a window without the usual window decorations (such as the title bar and close button), and will usually take up the entire screen. This means that you can easily alt-tab between programs and get notifications, while the game still fills the entire screen without wasting space on close buttons and such nonsense.

### VSync

Every display has a refresh rate. This is the number of times it can update the screen per second. Most monitors have a refresh rate of 60Hz, meaning they can update the screen 60 times per second. A 60Hz monitor is therefore perfectly suited for playing games at 60FPS, or 60 frames per second. It also works well for 30FPS content, as 60 is cleanly divisible by 30, and the monitor can just display each frame twice.

However, games don't usually run at a perfectly stable 60FPS all the time. When things get more graphically intense, your computer might not be able to run the game at the full 60FPS, and might drop to, say, 50. Your 60Hz monitor can't display 50FPS content nicely, because 60 isn't evenly divisible by 50. The game might also run at a *higher* framerate than the monitor can handle if your PC is powerful enough, and 70FPS content won't look good on a 60Hz monitor either.

To make the maths simpler, let's imagine a 3Hz monitor playing 2FPS footage. The monitor is ready to display a new frame 3 times per second, but there's only a new frame to display 2 times per second. The monitor can't "wait" for the frame to be ready; it always runs at the same speed. So when the monitor tries to display the frame, the graphics card hasn't finished rendering it yet. This means that it only displays some of the frame. This leads to screen tearing.

The same thing happens if the game is running too fast. If a 3Hz monitor tries to display 4FPS footage, by the time it updates, the graphics card has already started rendering a new frame, and the monitor displays some of the new frame over the top of the previous one.

Let's say that the monitor and game are running at a rate where the first frame displays perfectly, but when the monitor tries to show the second frame, it's only half-finished. This would mean that the top half of the second frame appears on the monitor, but the bottom half of the first frame is still there. Since video games don't tend to have massive changes between each frame, rather than looking like two completely different images overlapping each other, it will tend to look like one image with a "tear" in the middle.

![A simulated example of screen tearing. [*via Wikimedia Commons*](https://commons.wikimedia.org/wiki/File:Tearing_(simulated).jpg)](https://wasabi.lynnesbian.space/bune-city/2019/05/ca88ba8a6abbeb74a968288e0b1ae8c8/Tearing_simulated-1024x770.jpg){.wp-image-343}

Of course, if there *has* been a huge change between frames (a cutscene with a jumpcut, pausing the game...), it will look even more out of place.
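
Here's a small Python sketch of where the tear lands when a 60Hz monitor shows a 50FPS game with VSync off. It uses a simplified model, which is an assumption on my part: the monitor redraws the screen from top to bottom once per refresh, and the game swaps in each new frame the instant it's finished. Fractions are used so the maths stays exact.

```python
from fractions import Fraction

def tear_positions(refresh_hz, fps, frames):
    """Height of the tear line for each of the first `frames` new frames.

    Returned as a fraction of the screen height: 0 means the new frame
    arrived exactly at the start of a refresh, so there's no visible tear.
    """
    positions = []
    for k in range(1, frames + 1):
        finished_at = Fraction(k, fps)               # seconds since start
        refreshes_so_far = finished_at * refresh_hz  # in units of refreshes
        # The fractional part says how far down the screen the monitor had
        # got when the new frame arrived.
        positions.append(refreshes_so_far - int(refreshes_so_far))
    return positions

print([str(p) for p in tear_positions(60, 50, 5)])
# ['1/5', '2/5', '3/5', '4/5', '0']
```

The tear shows up at a different height on almost every refresh, which is why it's so distracting. If the game ran at exactly 60FPS in step with the monitor, every position would be 0.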

If your monitor runs at 60Hz, each tear will only be visible for 1/60th of a second. However, since the output will most likely tear every single frame, there will be a new tear somewhere else every time the monitor refreshes. This can be very distracting. VSync can deal with visual tearing in multiple ways:

#### Double Buffering

The graphics card will keep the old frame in memory, and whenever the monitor asks for an update, it will send the old frame. Meanwhile, it will render the new frame. When the graphics card is finished with the new frame, it will wait for the monitor to ask for an update, and send the new frame. It will then store the new frame as the old frame, and start working on the next new frame. As a side effect, this means that the game will be limited to framerates that divide evenly into your monitor's refresh rate. For example, if you have a 60Hz monitor, the game will only be able to display at 60FPS, 30FPS, 20FPS, 15FPS, and so on: numbers that 60 is cleanly divisible by. If your computer can only manage 50FPS, the game will drop all the way down to 30FPS.
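
Here's a short Python sketch of why double-buffered VSync snaps to those numbers. It assumes every frame takes the same time to render and that a finished frame can only appear at the next refresh. Real frame times vary from frame to frame, so actual games bounce between these steps.

```python
import math
from fractions import Fraction

def vsync_framerate(refresh_hz, render_ms):
    """Effective framerate with double-buffered VSync.

    A frame that misses a refresh has to wait for the next one, so each
    frame stays on screen for a whole number of refreshes (rounded up).
    """
    refreshes_per_frame = max(1, math.ceil(Fraction(render_ms) * refresh_hz / 1000))
    return refresh_hz / refreshes_per_frame

for ms in (10, 16, 20, 40, 60):
    print(f"{ms} ms per frame -> {vsync_framerate(60, ms):g} FPS")
# 10 ms per frame -> 60 FPS
# 16 ms per frame -> 60 FPS
# 20 ms per frame -> 30 FPS
# 40 ms per frame -> 20 FPS
# 60 ms per frame -> 15 FPS
```

A 20 ms frame would be 50FPS without VSync. With double buffering, it just misses every other refresh, so you get 30FPS instead.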

#### Triple Buffering

Triple buffering works similarly to double buffering, but the graphics card never needs to wait for the monitor to refresh before starting on the next frame, meaning that the game isn't limited to a divisor of 60Hz (or whatever your monitor's refresh rate is). The way this is achieved is a little more technical.

As the name suggests, triple buffering makes use of three buffers, areas of memory where the computer is able to store completed frames\[efn\_note\]A buffer is an area in memory where things are temporarily stored while other stuff is going on in the background. It isn't exclusive to VSync.\[/efn\_note\]. Triple buffering uses one "front" buffer and two "back" buffers to store the video. Whenever the display needs an update, it asks the front buffer for it. Meanwhile, the graphics card can render the new frame to one of the back buffers, which can then be copied into (or, in many implementations, simply swapped with) the front buffer. You can read about this in more detail [here](https://en.wikipedia.org/wiki/Multiple_buffering#Triple_buffering).
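
Here's a toy Python model of the three buffers. It shows the flavour of triple buffering where the monitor always takes the *newest* finished frame (Vulkan calls a similar presentation mode "mailbox"). Some drivers' "triple buffering" instead queues frames in order, which behaves differently. Rather than copying frames, this sketch swaps which buffer plays which role. The class and method names are made up for illustration.

```python
class TripleBuffer:
    """Toy model of triple buffering with three numbered buffers (0, 1, 2)."""

    def __init__(self):
        self.buffers = [None, None, None]  # the frames themselves
        self.front = 0       # being shown by the monitor
        self.drawing = 1     # being rendered into by the GPU
        self.ready = None    # newest finished frame waiting to be shown

    def finish_frame(self, frame):
        """The GPU finished rendering `frame`; it never has to wait."""
        self.buffers[self.drawing] = frame
        self.ready = self.drawing
        # Carry on in whichever buffer is neither on screen nor ready. If an
        # older finished frame was never shown, it gets overwritten (dropped).
        self.drawing = ({0, 1, 2} - {self.front, self.ready}).pop()

    def refresh(self):
        """The monitor refreshes: show the newest finished frame, if any."""
        if self.ready is not None:
            self.front, self.ready = self.ready, None
        return self.buffers[self.front]

tb = TripleBuffer()
tb.finish_frame("frame 1")
tb.finish_frame("frame 2")   # the GPU is running faster than the monitor...
print(tb.refresh())          # frame 2 (frame 1 was never shown)
print(tb.refresh())          # frame 2 (nothing new yet, so it's shown again)
```

Notice that the GPU never blocks. There's always a spare buffer to draw into, so its framerate isn't tied to the monitor's refresh rate.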

#### Adaptive VSync

Adaptive VSync enables VSync when the framerate exceeds your monitor's refresh rate, and disables it otherwise. This is a "best of both worlds" approach that means you don't get tearing when the game is running too fast, but you don't get locked to a divisor of your monitor's refresh rate when it's running too slow. This means that you'll still experience tearing when the game is running at a lower framerate than your monitor's refresh rate, but most people would rather have tearing at 50FPS than a game running at only 30FPS.

Adaptive VSync is a feature implemented by both AMD and Nvidia GPUs. Nvidia has an article about it [here](https://www.geforce.com/hardware/technology/adaptive-vsync/technology).
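
The decision adaptive VSync makes can be sketched in a few lines of Python. This is a simplified model of the policy described above, not the actual driver logic. I've treated "exactly at the refresh rate" as VSync on.

```python
def adaptive_vsync(fps, refresh_hz=60):
    """Return (vsync_on, framerate_shown) under a simple adaptive VSync policy."""
    if fps >= refresh_hz:
        return True, refresh_hz   # capped at the refresh rate: no tearing
    return False, fps             # VSync off: may tear, but no drop to 30FPS

for fps in (90, 60, 50):
    on, shown = adaptive_vsync(fps)
    print(f"game could run at {fps} FPS -> VSync {'on' if on else 'off'}, {shown} FPS shown")
# game could run at 90 FPS -> VSync on, 60 FPS shown
# game could run at 60 FPS -> VSync on, 60 FPS shown
# game could run at 50 FPS -> VSync off, 50 FPS shown
```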

#### FreeSync/GSync

These methods provide the best possible solution to the problem by approaching it from the other side. Instead of changing the GPU's framerate, they change the monitor's refresh rate to match the video signal, eliminating tearing without requiring framerate changes or extra memory buffers. This requires that you have a GPU *and* a monitor that are compatible with the technology.

Effects
-------

We'll now turn our attention to settings that provide different visual effects.

### Bloom

Bloom is one of many visual effects in modern video games designed to emulate the behaviour of a camera. This doesn't make much sense in most first person games (if you're looking through the eyes of a living creature, why do your "eyes" behave like cameras?), but it's still very common.

Bloom replicates the way that cameras show light "bleeding" from bright objects to their darker surroundings.

![An example of bloom. [*via Wikimedia Commons*](https://commons.wikimedia.org/wiki/File:HDRI-Example.jpg)](https://wasabi.lynnesbian.space/bune-city/2019/05/b8532efc51206d8495cba65aca303372/bloom.jpg){.wp-image-346 width="384" height="239"}

Bloom is a cheap effect, so enabling it typically has little to no impact on performance.

Nvidia has a comparison page where you can see the difference between bloom being enabled and disabled on a screenshot of the game *Tom Clancy's Rainbow Six Siege* [here](https://images.nvidia.com/geforce-com/international/comparisons/tom-clancys-rainbow-six-siege/tom-clancys-rainbow-six-siege-lens-effects-interactive-comparison-001-bloom-vs-off.html).
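
A common way to build a bloom effect has three steps: pick out the brightest parts of the image, blur them, and add the blur back on top. Here's a toy Python version that works on a single row of pixel brightnesses. The threshold, radius and strength values are made up. Real games work on the full 2D image, usually with a smoother blur (often at reduced resolution to keep it cheap).

```python
def bloom(row, threshold=0.8, radius=1, strength=0.5):
    """Apply a toy 1D bloom to a row of pixel brightnesses (0.0 to 1.0)."""
    # 1. Bright pass: keep only the parts brighter than the threshold.
    bright = [v if v > threshold else 0.0 for v in row]
    # 2. Blur the bright parts (a simple box blur) so they spread outwards.
    blurred = []
    for i in range(len(row)):
        window = bright[max(0, i - radius): i + radius + 1]
        blurred.append(sum(window) / len(window))
    # 3. Add the glow back on top of the original image, capped at 1.0.
    return [min(1.0, v + strength * b) for v, b in zip(row, blurred)]

row = [0.1, 0.1, 1.0, 0.1, 0.1]   # one bright light in a dark scene
print([round(v, 2) for v in bloom(row)])
# [0.1, 0.27, 1.0, 0.27, 0.1]: the light "bleeds" into its neighbours
```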

### Lens Flare

![An example of lens flare.](https://wasabi.lynnesbian.space/bune-city/2019/05/967d9583d1a0e40409814bcbd4faf41f/lens-flare-1024x576.jpg){.wp-image-347}

Lens flare is another effect that aims to simulate the behaviour of cameras. It has very little performance penalty, although some may find it obnoxious or unrealistic.

### Chromatic Aberration

Chromatic aberration occurs when a camera lens fails to focus the red, green, and blue light that makes up an image onto exactly the same spot. The colour channels don't quite line up, and you get coloured fringes around the edges of objects.

![An example of chromatic aberration. [*via Wikimedia Commons*](https://commons.wikimedia.org/wiki/File:Nearsighted_color_fringing_-9.5_diopter_-_Canon_PowerShot_A640_thru_glasses_-_closeup_detail.jpg)](https://wasabi.lynnesbian.space/bune-city/2019/05/7d0a8f8e80c500f4b16c58ace0358455/CA-1024x819.jpg){.wp-image-349}

Again, not much performance impact with this one. Whether or not you enable it is a matter of taste.
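
Games often fake chromatic aberration by reading the red and blue channels from slightly offset positions while leaving green where it is. Here's a toy one-dimensional Python version. The one-pixel shift and the left/right directions are arbitrary choices for illustration. Real implementations usually push the colours outward from the centre of the screen.

```python
def chromatic_aberration(row, shift=1):
    """Misalign the colour channels of a row of (r, g, b) pixels.

    Red is sampled `shift` pixels to the left, blue `shift` pixels to the
    right, and green is left alone. Samples past the edge use the edge pixel.
    """
    n = len(row)
    out = []
    for i in range(n):
        r = row[max(0, i - shift)][0]
        g = row[i][1]
        b = row[min(n - 1, i + shift)][2]
        out.append((r, g, b))
    return out

black, white = (0, 0, 0), (255, 255, 255)
row = [black, black, white, black, black]   # a single white pixel
print(chromatic_aberration(row)[1:4])
# [(0, 0, 255), (0, 255, 0), (255, 0, 0)]: white splits into coloured fringes
```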

### Motion Blur

This one applies to eyes, too! When something moves past you very quickly, you'll notice it looks kinda blurry. Motion blur attempts to recreate this by adding a blur whenever you move the in-game camera quickly. This is another "matter of taste" option.
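
The easiest version of motion blur to understand is "accumulation" blur. Instead of drawing a moving object once per frame, you draw it at several moments during the frame and average the results. Here's a toy one-dimensional Python sketch. The sample count and speeds are made-up values. Many modern games use a cheaper post-processing approach based on how fast each pixel is moving, but the visible result is similar.

```python
def motion_blur(width, start, velocity, samples=4):
    """Toy accumulation motion blur for a 1-pixel object on a 1D screen.

    The object moves `velocity` whole pixels during one frame. It's drawn
    at `samples` evenly spaced moments and the results are averaged.
    """
    row = [0.0] * width
    for s in range(samples):
        x = start + (velocity * s) // samples   # position at this moment
        if 0 <= x < width:
            row[x] += 1.0 / samples
    return row

print(motion_blur(8, start=1, velocity=4))
# [0.0, 0.25, 0.25, 0.25, 0.25, 0.0, 0.0, 0.0]: a smear instead of a dot
print(motion_blur(8, start=1, velocity=0))
# [0.0, 1.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]: not moving, so no blur
```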

Performance
-----------

This section covers settings that are there for performance reasons.

### Models (or Model Quality)

3D objects in computer graphics are made up of polygons, typically triangles (also called "tris", pronounced "tries") or four-sided polygons ("quads"). You can approximate any 3D shape out of these polygons. A ball made up of only 50 polygons wouldn't look very good, but a 500 polygon ball would look a lot better. However, this comes at a performance cost. Models with more polygons look better and less "computery", but require more graphics processing power.

![A simple model of a car made up of quads. *Pauljs75 at English Wikibooks \[Public domain\],* [*via Wikimedia Commons*](https://commons.wikimedia.org/wiki/File:W3D_all_quad_car_Step33.jpg)](https://upload.wikimedia.org/wikipedia/commons/5/51/W3D_all_quad_car_Step33.jpg)

A computer has to render more than just the polygons themselves. It also needs to apply lighting, shading, and textures to them, which makes processing high-poly models very intense.
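
To put numbers on the "50 vs 500 polygon ball" idea, here's a Python sketch using a "UV sphere", a common way of building a ball out of slices and bands like a globe's lines of longitude and latitude. The two sizes are chosen to land near 50 and 500 triangles. The `max_gap` function shows how far a polygon outline can stray from a true circle, which is where the visible "flat spots" on low-poly balls come from.

```python
import math

def uv_sphere_triangles(segments, rings):
    """Triangle count of a UV sphere.

    segments: slices around the equator; rings: bands from pole to pole.
    The two polar bands are fans of `segments` triangles each; every other
    band is `segments` quads, and each quad is two triangles.
    """
    return 2 * segments + 2 * segments * (rings - 2)

def max_gap(segments, radius=1.0):
    """Largest gap between a `segments`-sided polygon and the true circle."""
    return radius * (1 - math.cos(math.pi / segments))

for segments, rings in ((8, 4), (24, 12)):
    print(f"{segments}x{rings} sphere: {uv_sphere_triangles(segments, rings)} triangles, "
          f"outline off by up to {max_gap(segments):.1%} of the radius")
```

The 8x4 sphere has 48 triangles and the 24x12 sphere has 528. The bigger one is roughly ten times the work, but its outline is much closer to a real circle.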

### Textures

Textures are the images that are applied to the models. For example, you might have a model of a tree, and then an image of bark and leaves that is applied to the model to make it look like a tree. Without a texture, a model looks like the car above: a single uniform colour, possibly with lighting and shading applied\[efn\_note\]It's also possible to set the colours of polygons individually, either with an algorithm or a preset list of colours, but we won't go into that here.\[/efn\_note\].

Higher resolution textures look better, but require more VRAM (video memory) and processing power.

![A demonstration of how a texture image is mapped onto a 3D model. *Drummyfish \[CC0\],* [*via Wikimedia Commons*](https://commons.wikimedia.org/wiki/File:Texture_mapping_demonstration_animation.gif)](https://wasabi.lynnesbian.space/bune-city/2019/05/02469efe16c9d6d48da0ff91e4a3580c/Texture_mapping_demonstration_animation.gif){.wp-image-350}
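
Here's a rough Python sketch of why texture resolution eats VRAM so quickly. It assumes an *uncompressed* texture with 4 bytes per pixel (8 bits each for red, green, blue and transparency). Games usually use compressed texture formats that take several times less space, so treat these as upper bounds. The optional `mipmaps` flag also counts the smaller, pre-shrunk copies of the texture that games typically keep for distant surfaces.

```python
def texture_bytes(width, height, bytes_per_texel=4, mipmaps=False):
    """Approximate memory for one uncompressed texture, in bytes.

    With mipmaps=True, also counts the chain of half-size copies
    (width/2 x height/2, width/4 x height/4, ... down to 1x1).
    """
    total = width * height * bytes_per_texel
    while mipmaps and (width > 1 or height > 1):
        width, height = max(1, width // 2), max(1, height // 2)
        total += width * height * bytes_per_texel
    return total

for size in (1024, 2048, 4096):
    print(f"{size}x{size}: {texture_bytes(size, size) / 2**20:.0f} MiB")
# 1024x1024: 4 MiB
# 2048x2048: 16 MiB
# 4096x4096: 64 MiB
```

Each step up in texture resolution doubles the width and height, so it quadruples the memory needed.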

### Bilinear, Trilinear, and Anisotropic Filtering

These are all methods of texture filtering: working out what colour each pixel on screen should be when a texture is stretched, shrunk, or seen at an angle. The difference is most noticeable on textures that aren't facing the camera head-on, such as the ground stretching off into the distance. Bilinear and trilinear filtering are much less resource intensive than anisotropic filtering.

![A comparison of texture filtering methods. [via Wikimedia Commons](https://commons.wikimedia.org/wiki/File:Anisotropic_filtering_en.png)](https://wasabi.lynnesbian.space/bune-city/2019/05/b06fb8f6d7b233f44d9aeb31b596e641/anisotropic-1024x484.jpg){.wp-image-351}
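
Here's a simplified Python sketch of the first two methods on a tiny greyscale texture. Bilinear filtering blends the four texture pixels ("texels") nearest to the spot being sampled. Trilinear filtering does that on two neighbouring mip levels (pre-shrunk copies of the texture) and then blends between them. Anisotropic filtering isn't shown. It takes several samples along the direction the texture is most stretched, which is why it costs more. Real GPUs also handle texel-centre offsets and texture wrapping, which I've left out here.

```python
def lerp(a, b, t):
    """Blend from a (t=0) to b (t=1)."""
    return a + (b - a) * t

def bilinear_sample(texture, u, v):
    """Sample a greyscale texture (list of rows) at fractional texel coords.

    Blends the 4 nearest texels, weighted by how close (u, v) is to each.
    Coordinates outside the texture are clamped to its edges.
    """
    h, w = len(texture), len(texture[0])
    u = min(max(u, 0.0), w - 1)
    v = min(max(v, 0.0), h - 1)
    x0, y0 = int(u), int(v)
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    fx, fy = u - x0, v - y0
    top = lerp(texture[y0][x0], texture[y0][x1], fx)
    bottom = lerp(texture[y1][x0], texture[y1][x1], fx)
    return lerp(top, bottom, fy)

def trilinear_sample(mip0, mip1, u, v, level_blend):
    """Bilinear-sample two neighbouring mip levels and blend between them.

    mip1 is assumed to be half the size of mip0, so its coordinates are halved.
    level_blend (0 to 1) says how far between the two levels this pixel falls.
    """
    return lerp(bilinear_sample(mip0, u, v),
                bilinear_sample(mip1, u / 2, v / 2),
                level_blend)

mip0 = [[0.0, 1.0],
        [2.0, 3.0]]
mip1 = [[1.5]]   # the 1x1 mip level: the average of mip0
print(bilinear_sample(mip0, 0.5, 0.5))              # 1.5
print(trilinear_sample(mip0, mip1, 0.0, 0.0, 0.5))  # 0.75
```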

### Level of Detail

To save processing power, games will often replace distant textures and models with lower quality ones. If done well enough, this can be almost imperceptible. Games typically give you a slider to choose how aggressive you want the effect to be. The minimum setting only affects extremely distant objects (or sometimes disables LOD altogether), and the highest setting means that models and textures are replaced with lower quality versions even when they're fairly close.

Summary
-------

This article isn't finished yet! Thanks for reading what I've written so far, though! :mlem:

@@ -0,0 +1,15 @@

---
title: "Lynne Teaches Tech: How do mstdn-ebooks bots work?"
author: Lynne
categories: [Lynne Teaches Tech, Originally from the Fediverse]
tags: [Lynne Teaches Tech]
---

the ebooks bots (the ones using [the code i made](https://github.com/Lynnesbian/mstdn-ebooks), anyway) use something called [markov chains](https://en.wikipedia.org/wiki/Markov_chain). markov chains are a mathematical concept, and in computer science (computers are the only thing i know about), they are generally used for predictive text. when you type "how are" on your phone and the keyboard recommends "you", that's (usually) markov chains at work! as you type, your keyboard makes a database of everything you've ever typed and uses it to predict what you'll type next. keyboards generally come with a built-in database of common phrases, and then additionally learn from you as you type. this is why your brand new phone already knows to suggest "you" after "how are", before you've ever typed it yourself.

mstdn-ebooks bots use markov chains too! every time they're asked to post, they take a random selection of 10,000 posts (or fewer if you haven't made that many), learn from them, and use that to generate a new one. that means when you reply, they receive your reply, randomly select a huge chunk of posts, train a markov chain on them, generate a reply, and post it -- all in less than a second! technology!!

a markov chain isn't intelligent. it doesn't know where sentences start and end, and it doesn't understand grammar -- it doesn't know anything apart from "when you say this word, you usually say this one next". markov chains don't care if the input is words or colours or images or DNA sequences, they just have a database of items and probabilities and use them to create posts. they often make things that don't make sense, or just feel like two posts slammed together, but occasionally, they come up with gold (such as "[have these ancap nerds even played Super Mario 64](https://fedi.lynnesbian.space/web/statuses/100987016408210808)").
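
here's a from-scratch python sketch of the idea. it's not the actual mstdn-ebooks code, just a minimal illustration. it only looks at the previous *one* word, while many real generators look at the previous two or more. the 10,000-post limit is the one described above. the function names are made up.

```python
import random
from collections import defaultdict

def pick_training_posts(all_posts, limit=10_000, rng=random):
    """Randomly choose up to `limit` posts to learn from (fewer if there aren't that many)."""
    return rng.sample(all_posts, min(limit, len(all_posts)))

def build_chain(posts):
    """Map each word to every word that has ever followed it.

    Repeats are kept on purpose: a word that follows "are" twice as often
    appears twice as often in the list, so it's twice as likely to be picked.
    """
    chain = defaultdict(list)
    for post in posts:
        words = post.split()
        for current, following in zip(words, words[1:]):
            chain[current].append(following)
    return chain

def generate(chain, start, max_words=20, rng=random):
    """Start from `start` and keep picking a plausible next word until stuck."""
    words = [start]
    while len(words) < max_words and chain.get(words[-1]):
        words.append(rng.choice(chain[words[-1]]))
    return " ".join(words)

posts = ["how are you", "how are things", "are you ok"]
chain = build_chain(pick_training_posts(posts))
print(generate(chain, "how", rng=random.Random(1)))   # e.g. "how are you ok"
```

notice that "how are you ok" never appeared in the input. the chain glued the end of one post onto another because "you" sometimes follows "are" and "ok" sometimes follows "you". that's exactly the "two posts slammed together" effect.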

|

||||

|

||||

[view original post](https://fedi.lynnesbian.space/web/statuses/101548793919202772)

|

||||

|

||||

|

|

@ -0,0 +1,17 @@

|

|||

---

|

||||

title: "Lynne Teaches Tech: How does OCRbot work?"

|

||||

author: Lynne

|

||||

categories: [Lynne Teaches Tech, Originally from the Fediverse]

|

||||

tags: [Lynne Teaches Tech]

|

||||

---

|

||||

|

||||

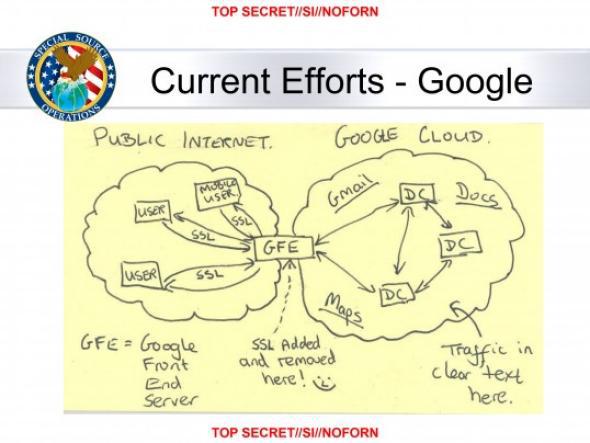

after downloading the image, [OCRbot](https://github.com/Lynnesbian/OCRbot) uses [tesseract-ocr](https://github.com/tesseract-ocr/tesseract) to extract the text. this is a free (as in libre and gratis) program that uses neural networks to do the job. it's been trained on a massive dataset of text and has "learned" what letters usually look like. it knows that a lowercase h is a line with a smaller line connected to it on the right, for example.

|

||||

|

||||

tesseract first tries to split the image into chunks of what it believes to be text. it then splits these chunks into words by looking for spaces, and then tries to identify the letters making up those words. if any of these steps fail, the entire process fails.
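
here's a tiny python sketch of the "split into words by looking for spaces" step. tesseract's real layout analysis is far more sophisticated than this. the sketch only shows the core idea: gaps between words are wider than gaps between letters. the input format (one true/false value per pixel column) and the `min_gap` value are assumptions made for illustration.

```python
def split_on_gaps(columns, min_gap=2):
    """Split one line of text into words using the blank space between them.

    columns: one bool per pixel column of the line, True if it contains ink.
    min_gap: how many blank columns in a row count as a space between words
             (narrower gaps are treated as the space between letters).
    Returns (start, end) column ranges, end exclusive, one per word.
    """
    words = []
    start = end = None
    blank_run = 0
    for i, ink in enumerate(columns):
        if ink:
            if start is None:
                start = i
            end = i
            blank_run = 0
        else:
            blank_run += 1
            if start is not None and blank_run >= min_gap:
                words.append((start, end + 1))
                start = None
    if start is not None:
        words.append((start, end + 1))
    return words

line = "##.#...##"   # '#' = column with ink, '.' = blank column
print(split_on_gaps([c == "#" for c in line]))   # [(0, 4), (7, 9)]
```

the one-column gap at position 2 is treated as the space between two letters of the same word, while the three-column gap splits the line into two words.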

neural networks work in a way loosely inspired by how a brain does, but on a much smaller scale - computers really aren't up to the task of simulating an entire brain right now. powerful computers are used to train the neural network for hours and hours, making it more accurate at reading text. this has some drawbacks - if it were only ever trained on helvetica text, it'd only be able to read that, for example. thus it's important to make sure you train it on lots of different fonts. tesseract provides these trained datasets for you, but you can train your own if you'd like.

finally, after extracting the text, OCRbot does some rudimentary fixes (like replacing \| with I, as tesseract thinks \| is a lot more frequent than it really is) and posts the reply.

[view original post](https://fedi.lynnesbian.space/web/statuses/101758028785197240)

@@ -0,0 +1,18 @@

---
title: "Lynne Teaches Tech: The DK64 Memory Leak"
author: Lynne
categories: [Lynne Teaches Tech, Originally from the Fediverse]
tags: [Lynne Teaches Tech]
---

Donkey Kong 64 was about to be released when the developers discovered a memory leak that caused the game to crash after a few minutes. There was no time to fix it, so instead they required the game to be played with the Expansion Pak, which doubled the N64's RAM. This meant that the game would only crash after \~10 hours rather than a few minutes.

Computers have two main types of storage: the hard drive (or solid state drive), which is where you keep your documents, videos, etc., and memory (or RAM), which is where the computer stores things it's currently "thinking about". If you have a document open in PowerPoint, it's in memory. Memory is a LOT faster than the hard drive, so it's preferable to use it when possible.

A memory leak is when a program keeps putting things in memory without taking them out once it's done with them, causing the amount of memory used to slowly go up and up until the computer runs out of memory and crashes. This is what happened with DK64.

The Expansion Pak added more memory to the Nintendo 64 (the system DK64 runs on), which meant that running out of memory would take a lot longer (in this case, ten hours instead of a few minutes). It's not a fix, but it was good enough.
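
How can doubling the memory turn "a few minutes" into "about ten hours"? The key is that the leak only eats into the *spare* memory. Here's a toy Python model to show this. All the numbers are made up for illustration: a game that normally uses 4,070 KB and leaks 7 KB every minute. The only real-world numbers are the roughly 4 MB of RAM in a stock N64 and the extra 4 MB from the Expansion Pak.

```python
class ToyConsole:
    """A made-up console with a fixed amount of RAM, measured in KB."""

    def __init__(self, ram_kb):
        self.ram_kb = ram_kb
        self.used_kb = 0

    def allocate(self, kb):
        if self.used_kb + kb > self.ram_kb:
            raise MemoryError("out of memory: the game crashes")
        self.used_kb += kb

    def free(self, kb):
        self.used_kb -= kb


def minutes_until_crash(ram_kb, base_kb=4070, leak_kb_per_minute=7):
    """Run a leaky 'game' one minute at a time until memory runs out."""
    console = ToyConsole(ram_kb)
    console.allocate(base_kb)   # everything the game legitimately needs
    minutes = 0
    try:
        while True:
            # Something gets stored every minute...
            console.allocate(leak_kb_per_minute)
            # ...but the matching console.free(leak_kb_per_minute) is missing.
            minutes += 1
    except MemoryError:
        return minutes

print(minutes_until_crash(4 * 1024))   # 3 (minutes, with 4 MB)
print(minutes_until_crash(8 * 1024))   # 588 (minutes, about 9.8 hours, with 8 MB)
```

With 4 MB, this imaginary game only has 26 KB to spare, so it crashes within minutes. Adding another 4 MB gives it over 4,000 KB of headroom. The leak is just as fast as before, but it takes about 150 times longer to fill that space.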

[View original post](https://fedi.lynnesbian.space/web/statuses/101514903220023730)

@@ -0,0 +1,29 @@

---
title: "Lynne Teaches Tech: What do all the parts of a URL or hyperlink mean?"
author: Lynne
categories: [Lynne Teaches Tech, Originally from the Fediverse]
tags: [Lynne Teaches Tech]
---

when you access a website in your browser, its URL (Uniform Resource Locator) will almost always start with either https:// or http://. this is known as the scheme, and tells the browser what type of connection it's going to be using, and how it needs to talk to the server. these aren't the only schemes - another common one is ftp, which is natively supported by most browsers. try opening this link: <ftp://ftp.iinet.net.au/pub/>

a hyperlink (or just link) is a clickable or otherwise interactive way to access a URL, typically represented by blue underlined text. clicking a link opens a URL, not the other way around.

the next part of a URL is the domain name, which may be preceded by one or more subdomains. for example:

google.com - no subdomain

docs.google.com - subdomain "docs"

a common subdomain is "www", for example, www.google.com. using www isn't necessary and has no special meaning, but many websites use or used it to indicate that the subdomain was intended to be accessed by a web browser. for example, typical webpages could be at www.example.com, with FTP files stored at ftp.example.com.

the ".com" at the end of a domain is a TLD, or Top Level Domain. these carry no special meaning to the computer, but are used to indicate to the user what type of website they're accessing. government websites use .gov, while university sites use .edu. many countries also have their own TLD and combine it with these categories - for example, in <https://australia.gov.au>, the TLD is .au, and the .gov before it marks it as an australian government site.

in "example.com/one/two", "/one/two" is the path. this is the path to the file or page you want to access, similarly to how the path to your user folder on your computer might be "C:\\Users\\Person".

some URLs end with a question mark and some other things, like "example.com?page=welcome". this is the query portion of the URL, which can be used to give the server some instructions. what this means varies from site to site.

finally, some URLs might end with a hash followed by a word, like example.com\#content. this is called a fragment or anchor, and tells the browser to scroll to a certain part of the page.

technically, a URL is only a URL if it includes the scheme. this means that <https://example.com> is a URL, but example.com on its own isn't - it's just a domain name. URLs are one kind of URI (the I standing for Identifier), so every URL is also a URI. however, people will understand what you mean if you use the two terms interchangeably.
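
if you want to poke at these parts yourself, python's standard library can split a URL up for you with `urllib.parse`. the example URL below is made up. the naive domain split at the end is just for illustration: it works for docs.example.com, but for australia.gov.au it would treat "gov" as the domain name, even though .gov.au really belongs together. real code uses the Public Suffix List for that.

```python
from urllib.parse import urlsplit, parse_qs

url = "https://docs.example.com/one/two?page=welcome#content"
parts = urlsplit(url)

print(parts.scheme)           # https
print(parts.hostname)         # docs.example.com
print(parts.path)             # /one/two
print(parts.query)            # page=welcome
print(parse_qs(parts.query))  # {'page': ['welcome']}
print(parts.fragment)         # content

# Naive split of the host name into subdomain(s), domain name and TLD.
*subdomains, domain, tld = parts.hostname.split(".")
print(subdomains, domain, tld)  # ['docs'] example com

# Without a scheme, nothing marks "example.com" as a host name,
# so it's parsed as a relative path instead.
print(urlsplit("example.com").path)      # example.com
print(urlsplit("example.com").hostname)  # None
```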

[view original post](https://fedi.lynnesbian.space/@lynnesbian/101898501800196300)

@@ -0,0 +1,14 @@

---
title: "Lynne Teaches Tech: What does \"decentralised\" mean?"
author: Lynne
categories: [Lynne Teaches Tech, Originally from the Fediverse]
tags: [Lynne Teaches Tech]
---

Let's use Twitter and Mastodon for our examples.

Twitter is centralised, while Mastodon is not. This means that it's possible for Twitter to disappear at any time - the CEO could (theoretically) shut it down and delete everything at a moment's notice. The company behind Twitter has complete control over everything that shows up on Twitter, and can do whatever they please with it.

Even if Mastodon's creator decided to shut down mastodon.social, all of the other instances would work fine. Mastodon can't be blocked by the government or your school (individual instances can, but there are always more). Mastodon can't be shut down. Mastodon can't disappear. This is true of all decentralised software, from matrix.org to Pleroma to email. It's a lot harder for Mastodon or email to disappear than it is for Twitter or Tumblr to do the same.

[View original post](https://fedi.lynnesbian.space/web/statuses/101674915458010485)

@@ -0,0 +1,17 @@

---
title: "Lynne Teaches Tech: What does HTTPS mean?"
author: Lynne
categories: [Lynne Teaches Tech, Originally from the Fediverse]
tags: [Lynne Teaches Tech]
---

when you see a website that's HTTPS rather than HTTP, it means the connection is secure. most popular browsers will display a green padlock in the URL bar to symbolise that (and colour it yellow or red if something's wrong).

to verify that a connection is secure (and not just someone saying it's secure), you need a certificate, a file that verifies that you are who you say you are.

a certificate can be distrusted or revoked. you can tell your own browser not to trust facebook's certificate if you like, and facebook will stop loading for you. more importantly (and practically), the CA (certificate authority) that issued the certificate can revoke it, and the site will stop working until they get a new one. this means that if facebook "goes rogue", the CA is allowed to revoke their certificate, guaranteeing (in theory) that if the site is HTTPS, it's definitely secure.

these certificates don't last forever. they need to be renewed, to prove that you're still there and still complying with the rules. as an example, gargron, the admin of mastodon.social, had certificate auto-renewal set up, which means the certificate would automatically get renewed when it was close to expiring. so why did the cert expire? why did mastodon.social go down? because while a new certificate was installed, it wasn't actually loaded. nginx, the server software that .social uses, was supposed to automatically load the new cert, but it didn't for some reason (computers are weird!), and thus .social went offline for about an hour.
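
the .social outage shows why it's worth checking the certificate a server is *actually serving*, not just the file on disk. here's a small python sketch that does this with the standard `ssl` and `socket` modules. the function names are made up, and the live check needs network access. if a server's certificate has already expired, `wrap_socket` will refuse the connection with a verification error, which is exactly what visitors' browsers do too.

```python
import socket
import ssl
import time

def fetch_not_after(hostname, port=443):
    """Connect to a live server and return the expiry date ('notAfter')
    of the certificate it's actually serving right now."""
    context = ssl.create_default_context()
    with socket.create_connection((hostname, port), timeout=10) as sock:
        with context.wrap_socket(sock, server_hostname=hostname) as tls:
            return tls.getpeercert()["notAfter"]

def days_until_expiry(not_after, now=None):
    """Days left before a certificate expires (negative if it already has)."""
    expires = ssl.cert_time_to_seconds(not_after)
    if now is None:
        now = time.time()
    return (expires - now) / 86400

# Example (needs network access):
# print(days_until_expiry(fetch_not_after("example.com")))
```

a monitoring script could run this every day and complain if the number gets small. that would catch a renewed certificate that was never loaded.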

|

||||

|

||||

[view original post](https://fedi.lynnesbian.space/web/statuses/101542386081739342)

|

||||

|

||||

|

|

@ -0,0 +1,28 @@

|

|||

---

|

||||

title: "Lynne Teaches Tech: What is \"free software\"? Why do people say software isn't free, even though you can download it for free from the app store?"

|

||||

author: Lynne

|

||||

categories: [Lynne Teaches Tech, Originally from the Fediverse]

|

||||

tags: [Lynne Teaches Tech]

|

||||

---

|

||||

|

||||

There are a lot of ways you could define "free software". For example, you could say Candy Crush or CCleaner is free software, because you can install it for free.

|

||||

|

||||

When people say that software is "free as in freedom", or "libre", they mean something else. Under these definitions, neither Candy Crush nor CCleaner would be free software. In order to be free by these definitions, software generally needs to fulfil these four criteria:

|

||||

|

||||

- The ability to run the software for any reason, without restrictions. This means that the free version of TeamViewer is not libre, as it tells you that you must purchase a license to use it commercially.

|

||||

- Being able to study and modify the program's inner workings. This requires the source code being available - just as you can't make your own version of a store-bought cake without the recipe (or some clever reverse engineering), it's necessary to have the source code readily available to easily modify a program. Software that doesn't provide the source code thus cannot fulfil this term, and software like Snapchat, which bans users for running modified versions, is definitely not one of these.

|

||||

- Being allowed to redistribute the software. If you buy a MacBook, you can install macOS updates for free, but you certainly aren't allowed to redistribute those updates.

|

||||

- Being allowed to distribute modified versions to others. If you're not allowed to download the app, make some changes, and send that to people, it breaks this rule. The YouTube app is free, but Google doesn't allow users to make their own changes to it and distribute it to others.

|

||||

|

||||

All of the software mentioned alongside those four rules is "free", but not free: each example breaks every one of the four freedoms. The distinction between "freely available" and "free to do as you please with" is often signified by saying "gratis" or "libre" - gratis software is free as in "free donuts", but libre software is free as in "freedom".

|

||||

|

||||

As with anything, it's hard to make clear cut rules to define what is and isn't an example of libre software. The [Cooperative Software License](https://lynnesbian.space/csl) (CSL) prohibits most companies from using the software, but is otherwise entirely libre. This violates the first rule above, because certain people aren't allowed to use the software, but I would say it's still a free software license (just a less permissive one), although many - including the Free Software Foundation - would disagree with me on that.

|

||||

|

||||

While the Free Software Foundation argues that the CSL is not free because of its restrictions on who can use it, they also argue that obfuscated JavaScript is not free because even though the source code is available, it has been intentionally made much harder to read and modify. Going back to our cake example from earlier, this is like if your store-bought cake came with a recipe, but it was written in hard-to-read cursive handwriting and all the ingredients had been replaced with generic names, like "ingredient 6" and "ingredient 4". It's still technically a recipe, and if you can figure out what ingredients are being referred to, it'll still work, but the FSF would argue that that doesn't count. For a computer, obfuscated source code and non-obfuscated source code are the same thing - a computer doesn't care whether you call a button a pickle, it'll still understand that if the user clicks on the pickle, something needs to happen. But for a human, obfuscated code is much harder to read than plain code, and thus the FSF considers it different.
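To make the pickle example concrete, here are two Python functions that do exactly the same thing. The first uses descriptive names; the second is a hand-"obfuscated" copy with meaningless ones. Both are made up for illustration, and real obfuscation tools usually do more than just rename things, but the principle is the same: the computer runs both identically, while only one is easy for a person to read and modify.

```python
# Readable version: the names explain what each value is for.
def total_after_discount(prices, discount_rate):
    subtotal = sum(prices)
    return subtotal - subtotal * discount_rate

# "Obfuscated" version: identical logic, but the names tell a human nothing.
def pickle(a, b):
    c = sum(a)
    return c - c * b
```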

|

||||

|

||||

And finally, you might be interested in the Free Software Foundation's [article](https://www.gnu.org/philosophy/free-sw.en.html) on what is and isn't free software. This is where those four rules came from - the FSF calls them the four essential freedoms. It's worth noting that they again outline here that "Obfuscated 'source code' is not real source code and does not count as source code."

|

||||

|

||||

Neither of these links is necessarily an endorsement of the content contained within, although the CSL page *is* hosted on my own server, which is an endorsement anyway.

|

||||

|

||||

[View original post](https://fedi.lynnesbian.space/@lynnesbian/101758159647256687)

|

||||

|

||||

|

|

@ -0,0 +1,17 @@

|

|||

---

|

||||

title: "Lynne Teaches Tech: what is the \"analogue loophole\"?"

|

||||

author: Lynne

|

||||

categories: [Lynne Teaches Tech, Originally from the Fediverse]

|

||||

tags: [Lynne Teaches Tech]

|

||||

---

|

||||

|

||||

the "analogue loophole" (or the analog loophole) refers to the idea that no matter how hard you try to make sure nobody can make illegal copies of videos, music, or text, there's always a "hole" in the protection that occurs when it's no longer digital. digital content can be protected - video files can be encrypted, music players can limit you to five devices - but analogue signals can't be. for example, itunes can stop you from putting a song on more than five computers, but if you play the song through your speakers and record it, what you do with it is beyond apple's control.

|

||||

|

||||

one of the few remaining analogue outputs on a modern device is the audio jack. this is where you connect your headphones or speakers. your laptop only knows that something's plugged in. it doesn't know what's connected. you could be playing it through earphones or a massive speaker system and it wouldn't be able to tell. meanwhile, HDMI is a digital output format. using a copy-protection system called HDCP, it can check whether the device on the other end is an approved one, like a TV, and can refuse to send protected video to something like a recording device, to make it harder for you to make copies of movies.

|

||||

|

||||

if the audio jack is replaced by USB-C or bluetooth, it becomes possible to tell what you're connecting to the laptop or phone. your phone might disable audio playback on anything but headphones to prevent you from hosting a public event with the music. it could also detect a recording device that you're using to (for example) make a copy of a song you're listening to on spotify and turn off the playback.

|

||||

|

||||

of course, at some point, digital needs to become analogue. humans can't watch electrical pulses, we need to see patterns of light. we can't listen to streams of 1s and 0s, we need vibrations in the air. this means that it's impossible to truly close the analogue hole - no matter what you do, someone can always just point a camera at their TV. it'll have worse quality, but it can never be stopped.

|

||||

|

||||

[view original post](https://fedi.lynnesbian.space/web/statuses/101758262714214719)

|

||||

|

||||

|

|

@ -0,0 +1,39 @@

|

|||

---

|

||||

title: "Lynne Teaches Tech: What's an integer overflow?"

|

||||

author: Lynne

|

||||

categories: [Lynne Teaches Tech, Originally from the Fediverse]

|

||||

tags: [Lynne Teaches Tech]

|

||||

---

|

||||

|

||||

most people count using a decimal system. the lowest digit is 0, followed by 1, 2, and so on, through to 9. when you're counting up and you reach nine, you need to add another digit. there's no way to express ten with only one digit, so you use two digits to write 10.

|

||||

|

||||

computers use a binary number system instead. the lowest digit is 0, followed by 1, and that's it! so when you count to one, you need to add another digit to get to two, which is written as 10 in binary.

|

||||

|

||||

in decimal, reading from right to left, the digits mean ones, tens, hundreds, thousands, ten thousands... this means that a 4 in the third position from the right (followed by two zeroes) means four hundred. reading binary from right to left, the digits mean ones, twos, fours, eights... a 1 in the fourth position means eight, which is written as 1000. decimal uses powers of 10, binary uses powers of 2.
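here's a small python sketch of that idea. it reads a string of binary digits starting from the rightmost one (the ones place) and adds up the place value of every 1. python can already do this with `int(bits, 2)`; the function below just spells out the steps:

```python
def binary_to_decimal(bits: str) -> int:
    """Convert a string of binary digits like '1000' into a regular number."""
    total = 0
    place_value = 1  # ones, then twos, fours, eights...
    for digit in reversed(bits):  # start at the rightmost digit
        if digit == "1":
            total += place_value
        place_value *= 2  # each step to the left is worth twice as much
    return total

print(binary_to_decimal("10"))    # 2
print(binary_to_decimal("1000"))  # 8
```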

|

||||

|

||||

let's say you can only write two digits on a piece of paper. you can easily write numbers like 12 and 8 and 74, but what about 100? there's nothing you can do. but let's assume you aren't aware of that, and you're a computer following a simple algorithm to calculate 99+1. first, you increment the least significant digit, which is the rightmost one. this leaves you with 90, and you need to carry the one. so you increment the other nine, and carry the one again, leaving you with 00. normally, you'd just write the one you've been carrying and end up with 100, which is the correct answer. however, you only have space for two digits, so you can't continue. thus, you end up saying that 99 plus 1 equals 0.
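the piece of paper can be simulated in python. this sketch adds two numbers one decimal digit at a time, carrying as it goes, but only keeps a fixed number of digits (two by default). whatever is still being carried at the end has nowhere to go and is simply dropped:

```python
def add_with_limited_digits(a: int, b: int, digits: int = 2) -> int:
    """Add a and b digit by digit, keeping only the rightmost `digits` digits."""
    result = 0
    carry = 0
    place = 1  # ones, then tens, then hundreds...
    for _ in range(digits):
        digit_sum = (a // place) % 10 + (b // place) % 10 + carry
        result += (digit_sum % 10) * place  # write down the last digit of the sum
        carry = digit_sum // 10             # carry the rest to the next column
        place *= 10
    # if carry is still 1 here, it has "overflowed": there's no column left for it
    return result

print(add_with_limited_digits(12, 74))  # 86
print(add_with_limited_digits(99, 1))   # 0
```

given a third column (`digits=3`), the same calculation gives the correct answer of 100.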

|

||||

|

||||

an eight-bit number has room for eight binary digits. this means that if the computer is at 11111111 (255 in decimal) and tries to add 1 again, it ends up with zero. this is called an integer overflow error - the one that the computer has been carrying has "overflowed" and spilled out, and is now lost. the number has wrapped around from 255 to 0, as if the numbers were on a loop of paper. underflow is the opposite of this problem - zero minus one is 11111111 (255).
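python's own integers never overflow (they just keep growing), so to see the eight-bit behaviour we have to fake the limit. this sketch keeps only the lowest eight bits of each result using a bitwise AND with `0xFF` (11111111 in binary), which is one common way to simulate a fixed-size unsigned integer:

```python
EIGHT_BITS = 0xFF  # 11111111 in binary, 255 in decimal

def add_u8(a: int, b: int) -> int:
    """Add as an 8-bit unsigned integer would: anything past 8 bits is lost."""
    return (a + b) & EIGHT_BITS

def sub_u8(a: int, b: int) -> int:
    """Subtract as an 8-bit unsigned integer would, wrapping below zero."""
    return (a - b) & EIGHT_BITS

print(format(add_u8(254, 1), "08b"))  # 11111111
print(add_u8(255, 1))                 # 0   (overflow)
print(sub_u8(0, 1))                   # 255 (underflow)
```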

|

||||

|

||||

so if adding to the highest number possible should create zero, why does it sometimes give a negative number instead? this is due to signed integers. an unsigned integer looks like this:

|

||||

7279

|

||||

a signed integer looks like +628 or -216. a computer doesn't have anywhere special to put that negative (or positive) sign, so it has to use one of the bits in the number. 1111 might mean -111 (negative seven), for example.

|

||||

|

||||

(n.b. the method of signing integers described below is "offset binary". there are other methods of doing this as well, most notably "two's complement", which is what most modern computers actually use, but we'll focus on offset binary because it's intuitive.)

|

||||

|

||||

if we want to represent negative numbers, we can't start at zero, because we need to be able to go lower than that. in binary, there are sixteen different possible combinations of four digits/bits, from 0000 to 1111 - zero to fifteen. instead of treating 0000 as zero, we can move zero to the halfway point between 0000 and 1111. since there are sixteen values from 0000 to 1111 (an even number), there's no single middle value. (the middle between one and three is two, but there's no whole number middle between one and four.) we'll settle for choosing 1000 to be our zero, which means there are eight numbers below zero and seven numbers above it. if we treat zero as positive, we have eight negative and eight positive numbers to work with. our number range has now gone from 0 to 15, to -8 to 7. we can't count as high, but we can count lower.

|

||||

|

||||

in such a system, 1111 would be 7 instead of 15, just as 1000 is 0 instead of 8. when adding one to 1111, it overflows to 0000, which means -8 with our system. this is why adding to a high, positive number can produce a low, negative number. only signed integers can overflow from a positive number to a negative one - an unsigned integer just wraps back around to zero.
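the four-bit offset binary system above can be sketched in python like this. the bit pattern 1000 is treated as zero, so decoding just means reading the bits as a normal number and subtracting eight. this models the post's example only; real hardware mostly uses a different scheme, so don't read it as a description of your CPU:

```python
BITS = 4
OFFSET = 2 ** (BITS - 1)  # 8, so the pattern 1000 means zero

def decode(pattern: str) -> int:
    """Read a 4-bit offset binary pattern like '1111' as a signed number."""
    return int(pattern, 2) - OFFSET

def encode(value: int) -> str:
    """Write a number from -8 to 7 as a 4-bit offset binary pattern."""
    return format(value + OFFSET, f"0{BITS}b")

def add_one(pattern: str) -> str:
    """Add one to the raw bits, wrapping from 1111 back to 0000."""
    return format((int(pattern, 2) + 1) % 2 ** BITS, f"0{BITS}b")

print(decode("1111"), decode("1000"), decode("0000"))  # 7 0 -8
print(decode(add_one("1111")))                          # -8
```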

|

||||

|

||||

overflow and underflow bugs are the root of many software issues, ranging from fascinating to dangerous. according to a widely repeated story about the first game in sid meier's civilization series, gandhi had an aggressiveness score of 1, the lowest possible. certain political actions reduced that score by 2, which caused it to underflow and become 255 instead - far beyond the intended maximum - which gave him a very strong tendency to use nuclear weaponry. (the game's developers have since said this bug never actually happened, but it's still a neat illustration of underflow.) this story was so well-known and accidentally hilarious that the company decided to intentionally make gandhi have a strong affinity for nukes in almost all the following games. some arcade games relied on the level number to generate the level, and broke when the number went above what it was expecting. (the reason behind the pac-man "kill screen" is particularly interesting!) for a more serious and worrying example of integer overflow, see this article: <https://en.wikipedia.org/wiki/Year_2038_problem> (unlike Y2K, this one is an actual issue, and has already caused numerous problems)
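the year 2038 problem comes from storing time as a signed 32-bit count of seconds since 1 january 1970. this python sketch fakes a signed 32-bit integer (wrapping values into the range -2,147,483,648 to 2,147,483,647) to show what happens one second after the latest time it can hold:

```python
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)

def wrap_int32(value: int) -> int:
    """Squeeze a number into a signed 32-bit integer, wrapping on overflow."""
    return (value + 2**31) % 2**32 - 2**31

def seconds_to_date(seconds: int) -> datetime:
    """Turn a count of seconds since 1970 into a date (in UTC)."""
    return EPOCH + timedelta(seconds=seconds)

last_second = 2**31 - 1
print(seconds_to_date(last_second))                  # 2038-01-19 03:14:07+00:00
print(seconds_to_date(wrap_int32(last_second + 1)))  # 1901-12-13 20:45:52+00:00
```

one tick past the limit, the clock jumps back to 1901, which is exactly the kind of wraparound described above.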

|

||||

|

||||

- {.wp-image-55}

|

||||

|

||||

- {.wp-image-56}

|

||||

|

||||

the first image is a chart explaining two methods of representing negative numbers with four bits (the one used in this post is on the left). the second is a real-world example of an "overflow".

|

||||

|

||||

thanks so much for reading!

|

||||

|

||||

[view original post](https://fedi.lynnesbian.space/@lynnesbian/101919232005592454)

|

||||

|

||||

|

|

@ -0,0 +1,23 @@

|

|||

---

|

||||

title: "Lynne Teaches Tech: What's \"defragging\"?"

|

||||

author: Lynne

|

||||

categories: [Lynne Teaches Tech, Originally from the Fediverse]

|

||||

tags: [Lynne Teaches Tech]

|

||||

---

|

||||

|

||||

"defrag" is short for "defragment". when a file is saved to a hard drive, it is physically written to the device by a needle. when you need to read the file, the needle moves along the portion of the disk that contains the data to read it.

|

||||

|

||||

let's say you have three files on a hard drive, on a computer running windows. when you created them, windows automatically put them in order. as soon as the data for file A ends, the data for file B begins. but now you want to make file A bigger. file B is in the way, so you can't just add more to it. your computer can either

|

||||

a) move file A somewhere else on the drive with more free space. this is a slow operation, and may be impossible if you don't have much free space remaining.

|

||||

b) leave a note saying "the rest of the file is over here" and put the new data somewhere else.

|

||||

|

||||

option B is what windows will generally do. this means that over time, as you delete and create and change the size of files on your PC, they end up getting split into lots of small pieces. this is bad for performance, because the hard drive platter and needle need to do a lot more moving around to be able to read the data. if a file is in ten pieces, the needle has to move somewhere else ten times just to read that one file.
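here's a toy model of option b) in python. the "disk" is just a list of blocks, where each block either holds part of a file (named by a letter) or is empty (`None`). this is a big simplification, and real file systems track far more than this, but it shows how growing a file that's boxed in splits it into pieces:

```python
def append_block(disk: list, name: str) -> None:
    """Option b): put the new data in the first free block, wherever that is."""
    disk[disk.index(None)] = name

def count_fragments(disk: list, name: str) -> int:
    """Count how many separate pieces the file `name` is split into."""
    fragments = 0
    previous = None
    for block in disk:
        if block == name and previous != name:
            fragments += 1  # a new piece of the file starts here
        previous = block
    return fragments

# Files A, B and C were written one after another, followed by free space.
disk = ["A", "A", "B", "B", "C", "C", None, None, None, None]
print(count_fragments(disk, "A"))  # 1

# Now file A grows by two blocks, but B is in the way.
append_block(disk, "A")
append_block(disk, "A")
print(disk)                        # ['A', 'A', 'B', 'B', 'C', 'C', 'A', 'A', None, None]
print(count_fragments(disk, "A"))  # 2
```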

|

||||

|

||||

to fix this, you need to defragment your hard drive. when you do this, windows will take some time to scan the hard drive and look for the files that are split up into multiple pieces, and then it'll do its best to ensure that they end up split into as few pieces as possible. it also ensures (to some extent) that related files are next to each other - for example, it might put five files it needs to boot up right next to each other to ensure they can be found and read faster. by default, windows 10 defragments your computer weekly at 3am, but you can do it manually at any time. doing it weekly ensures that things never get too messy. leaving a hard drive's files to get fragmented for several months or even years can cause the defragmentation process to take hours.
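using the same kind of toy model (a list of blocks, with `None` for free space), a very naive defragmenter could rewrite the whole disk so that each file's blocks sit together, with all the free space gathered at the end. real defragmenters are much smarter about what they move and when, so this only sketches the end result:

```python
def defragment(disk: list) -> list:
    """Return a new layout where each file is in one piece, in order of first appearance."""
    files_in_order = []
    for block in disk:
        if block is not None and block not in files_in_order:
            files_in_order.append(block)
    new_layout = []
    for name in files_in_order:
        new_layout.extend([name] * disk.count(name))  # write the file's blocks side by side
    free_blocks = len(disk) - len(new_layout)
    return new_layout + [None] * free_blocks  # free space ends up in one big chunk

fragmented = ["A", "A", "B", "B", "C", "C", "A", "A", None, None]
print(defragment(fragmented))
# ['A', 'A', 'A', 'A', 'B', 'B', 'C', 'C', None, None]
```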

|

||||

|

||||

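many modern file systems use techniques to avoid fragmentation, but no hard drive based system is truly immune to this issue. this isn't a problem with SSDs, however, as they work completely differently to a hard drive: there's no needle that has to physically move to each piece of a file, so fragmentation has very little effect on their speed.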

|

||||

|

||||

a heavily fragmented file system will still work; it'll just be slower, and might wear down the mechanisms of the hard drive a little faster. defragmentation is entirely optional, but a good idea. plus, it's always cool to watch the windows 98 defrag utility doing its thing! :mlem:

|

||||

|

||||

[view original post](https://fedi.lynnesbian.space/@lynnesbian/101810116855436290)

|

||||

|

||||

|

|

@ -0,0 +1,33 @@

|

|||

---

|

||||

title: "Lynne Teaches Tech: What's Keybase?"

|

||||

author: Lynne

|

||||

categories: [Lynne Teaches Tech, Originally from the Fediverse]

|

||||

tags: [Lynne Teaches Tech]

|

||||

---

|

||||

|

||||

keybase is a website that allows you to prove that a given account or website is owned by you. to explain how this works, we'll need to briefly cover public key cryptography.

|

||||

|

||||

there are many ways to encrypt a file. one such way involves using a password to encrypt the file, which can then be decrypted using the same password. this is known as a symmetrical method, because the way it's encrypted is the same as the way it's decrypted - using a password. the underlying methods of encryption and decryption may be different, but the password remains the same. how these algorithms work is outside the scope of this post - i might make a future post about encryption.

|

||||

|

||||

public key encryption is asymmetrical. this means the way you encrypt it is different from the way you decrypt it. a password protected file can be opened by anyone who knows the password, but a file encrypted using this method can only be decrypted by the person you're sending it to (unless their private key has been stolen). if you encrypt a file using someone's public key, the only way to decrypt it is with their private key. since i'm the only one with access to my private key, i'm the only person who can decrypt any files that are encrypted using my public key.

|

||||

|

||||

my private key can also be used to "sign" a file or message to prove that i said it. anyone can verify that i was the one who signed it by using my public key. comparing the signature to any other public key won't return a match, and changing even one letter of the text will mean that the signature no longer works.
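to give a feel for how one key undoes what the other does, here's "textbook" RSA, one of the classic public key methods, in python, using the tiny example numbers often used to teach it. this is a sketch only: real keys are hundreds of digits long, real software adds padding and signs a hash of the message rather than the message itself, and nothing here reflects how keybase specifically is built. messages are just small numbers (below `n`) to keep things simple:

```python
# Two tiny primes. Real keys use primes that are hundreds of digits long.
p, q = 61, 53
n = p * q                 # 3233, shared by both keys
phi = (p - 1) * (q - 1)   # 3120
e = 17                    # public key: (n, e)
d = pow(e, -1, phi)       # private key: (n, d); d is 2753 (needs Python 3.8+)

def encrypt(message: int) -> int:
    """Anyone can do this with the public key."""
    return pow(message, e, n)

def decrypt(ciphertext: int) -> int:
    """Only the holder of the private key can do this."""
    return pow(ciphertext, d, n)

def sign(message: int) -> int:
    """Only the private key can produce a signature..."""
    return pow(message, d, n)

def verify(message: int, signature: int) -> bool:
    """...but anyone can check it with the public key."""
    return pow(signature, e, n) == message

secret = encrypt(65)
print(secret, decrypt(secret))  # 2790 65
signature = sign(65)
print(verify(65, signature))    # True
print(verify(66, signature))    # False: the "message" was changed
```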

|

||||

|

||||

as the signing process can be used to guarantee that i said something, this means that i can use it to prove that i own, say, a particular facebook account. i could make a post saying "this is lynne" with my signature attached, and anyone could verify it using my public key. this is where keybase comes in.

|

||||

|

||||

the process of signing a post is rather technical, and everyone who wants to verify it will need to know where to get your public key. there are "keyservers" that contain people's public keys, but the average person won't know that, or what the long, jumbled mess of characters at the end of a message even means. keybase does this for you. after you create an account, it generates a public and private key for you to use. you don't even need to access these, it's all managed automatically. you can then verify that you own a given twitter, reddit, mastodon, etc. account by following the steps they provide to you. you just need to make a single post, which keybase will check for, compare against your public key, verify that it's you, and add to your profile. users can also download your public key and verify it themselves.

|

||||

|

||||

support for mastodon was only added recently and isn't quite complete yet, but it's ready to use and works well. this is why you might have noticed a lot of people talking about it recently. support for keybase is new in mastodon 2.8.

|

||||

|

||||

keybase can also be used to prove that you own a given website, again by making a public, signed statement. i've proven that i own lynnesbian.space with a statement here: <https://lynnesbian.space/keybase.txt>

|

||||

|

||||

it also provides a UI to more easily verify someone's signed message, without having to find and download their public key yourself.

|

||||

|

||||

keybase is built on existing and tested standards and technologies, and everything that it does can also be done yourself by hand. it just exists to make this kind of thing more accessible to the general public.

|

||||

|

||||

i've proven my ownership of this mastodon account ([\@lynnesbian](https://fedi.lynnesbian.space/@lynnesbian)), and you can verify that by checking my keybase page: <https://keybase.io/lynnesbian/>

|

||||

|

||||

keybase also offers encrypted chat and file storage, but its main feature is that you can easily verify and confirm that you are who you say you are. so if you see a website claiming to be owned by me, and you don't see it in my keybase profile, you should be suspicious!

|

||||

|

||||

[view original post](https://fedi.lynnesbian.space/@lynnesbian/101939803138033541)

|

||||

|

||||

|

|

@ -0,0 +1,28 @@

|

|||

---

|

||||

title: "Lynne Teaches Tech: Why are there so few web browsers?"

|

||||

author: Lynne

|

||||

categories: [Lynne Teaches Tech, Originally from the Fediverse]

|

||||

tags: [Lynne Teaches Tech]

|

||||

---

|

||||

|

||||

a web browser is a program that displays an HTML document, in the same way that a text editor is a program that displays a txt file, or a video player displays MP4s, AVIs, etc. HTML has been around for a while, first appearing around 1990.

|

||||

|

||||

HTML alone is enough to create a website, but almost all sites bring in CSS as well, which allows you to customise the style of a webpage, such as choosing a font or setting a background image¹. javascript (JS) is also used for many sites, allowing for interactivity, such as hiding and showing content or making web games.

|

||||

|

||||

together, HTML, CSS, and JS make up the foundations of the modern web. a browser that aims to be compatible with as many existing websites as possible must therefore implement all three of them effectively. some websites (twitter, ebay) will fall back to a "legacy" mode if javascript support isn't present, while others (mastodon, google maps) won't work at all.

|

||||

|

||||

implementing javascript and making sure it works with HTML is a particularly difficult challenge, which sets the bar for creating a new browser from scratch staggeringly high. this is partly why so few browsers capable of handling these lofty requirements aren't based on firefox or chrome². microsoft has decided to switch edge over to a chrome-based project, opera switched to the chrome engine years ago, and so on, because of the difficulty of keeping up. that's not to say there aren't any browsers that aren't based on firefox or chrome - safari isn't based on either of them³ - but the list is certainly sparse.

|

||||

|

||||

due to the difficulty of creating and maintaining a web browser, and keeping up with the ever evolving standards, bugs and oddities appear quite frequently. they often manifest in weird and obscure cases, and occasionally get reported on if they're major enough. an old version of internet explorer rather famously had a bug that caused it to render certain elements of websites completely incorrectly. by the time it was fixed, some websites were already relying on it. microsoft decided to implement a "quirks mode" feature that, when enabled, would simulate the old, buggy behaviour in order to get the websites to work right. most modern browsers also implement a similar feature. this just adds yet another layer of difficulty to creating a browser.

|

||||

|

||||

the complexity of creating a web browser combined with the pre-existing market share domination of google chrome makes creating a new web browser difficult. this has had the effect of further consolidating chrome's market share. chrome is currently sitting at about 71.5% market share⁴, with opera (which is based on chrome) adding a further 2.4%. this means that google has a lot of say over the direction the web is headed in. google can create and implement new ways of doing things and force others to either adopt or disappear. monopolies are never a good thing, especially not over something as fundamental and universal as a web browser. at its peak, internet explorer had over 90% market share. some outdated websites still require internet explorer, which is one of the main reasons why windows 10 still includes it, despite also having edge.

|

||||

|

||||

[view original post](https://fedi.lynnesbian.space/@lynnesbian/101817020284110778)

|

||||

|

||||

footnotes:

|

||||

|

||||

1. before CSS, styling was done directly through HTML itself. while this way of doing things is considered outdated and deprecated, both firefox and chrome still support it for legacy compatibility. having to support deprecated standards is yet another hurdle in creating a browser.

|

||||

2. chrome is the non-[free (as in freedom)](https://bune.city/2019/05/lynne-teaches-tech-what-is-free-software-why-do-people-say-software-isnt-free-even-though-you-can-download-it-for-free-from-the-app-store/) version of the open source browser "chromium", also created by google. compared to chromium, chrome adds some proprietary features like adobe flash and MP3 playback.

|

||||

3. the engine safari uses is called webkit. chrome used to use this engine, but switched to a new engine based on webkit called blink. safari therefore shares at least some code with chrome.

|

||||

4. based on <http://gs.statcounter.com/browser-market-share/desktop/worldwide>. note that it's impossible to perfectly measure browser market share, but this is good for getting an estimate.

|

||||

|

||||

|

|

@ -0,0 +1,19 @@

|

|||

---

|

||||

title: "Lynne Teaches Tech: Why do people say GNU/Linux or GNU+Linux?"

|

||||

author: Lynne

|

||||

categories: [Lynne Teaches Tech, Originally from the Fediverse]

|

||||

tags: [Lynne Teaches Tech]

|

||||

---

|

||||

|

||||

paraphrased Wikipedia quote:

|

||||

In 1991, the Linux kernel appeared. Combined with the operating system utilities developed by the GNU project, it allowed for the first operating system that was free software, commonly known as Linux.

|

||||

|

||||

in other words, the linux kernel and GNU utilities work together to form the linux operating system. this is why people like to say "GNU/Linux" or "GNU+Linux", to recognise the work done by the GNU project.

|

||||

|

||||

however, it is possible to make an operating system based on the linux kernel without using GNU software. notable examples of this include alpine and android (yes, the phone operating system). this means that when you say "GNU/Linux", you aren't actually addressing all linux-based operating systems. android is not GNU/linux because it does not contain GNU software, yet it's still a linux-based operating system.

|

||||

|

||||

non-GNU linux operating systems are rare, but they do exist. personally, i prefer to say "linux" rather than "GNU/linux" to acknowledge the existence and (more importantly) the viability of projects such as alpine, but there are people who like to recognise GNU's contributions to the mainstream linux operating system base. both are correct. the maintainers of debian call it a "GNU/Linux distribution", while arch bills itself as a "linux distribution".

|

||||

|

||||

[view original post](https://fedi.lynnesbian.space/web/statuses/101426988587606255)

|

||||

|

||||

|

|

@ -0,0 +1,22 @@

|

|||

---

|

||||

title: "Lynne Teaches Tech: Why does text on a webpage stay sharp when you zoom in, even though images get blurry?"

|

||||

author: Lynne

|

||||

categories: [Lynne Teaches Tech, Originally from the Fediverse]

|

||||

tags: [Lynne Teaches Tech]

|

||||

---

|

||||

|

||||

images like PNG and JPEG files get blurry when zoomed in beyond 100% of their size. this is true of video files, too, and many other methods of representing graphics. this is because these files contain an exact description of what to show. they tell the computer what colour each point on the image is, but they only list a certain number of points (or picture elements - pixels!). if a photo is 800x600, it's 800 pixels wide, and 600 pixels tall. if you ask the computer to show it any bigger than that, it has to guess what's between those pixels. it doesn't know what the image contains - to a computer, a photo of the sky is just a bunch of blue pixels with some patches of white thrown in. there are many algorithms that a computer can use to fill in those blanks, but in the end, it's just an estimation. it won't be able to show you any more detail than the regular version could.
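here's one of the simplest of those algorithms, "nearest neighbour" scaling, sketched in python with a made-up 2x2 image stored as a grid of colour names. every pixel is just repeated, so the picture gets bigger (and blockier) without gaining a single new detail. smoother algorithms blend neighbouring colours instead, which is where the blurriness comes from, but they're still only guessing:

```python
def upscale_nearest(image: list, factor: int) -> list:
    """Enlarge a grid of pixels by repeating each pixel `factor` times across and down."""
    bigger = []
    for row in image:
        wide_row = [pixel for pixel in row for _ in range(factor)]
        for _ in range(factor):
            bigger.append(list(wide_row))
    return bigger

tiny = [["blue", "white"],
        ["white", "blue"]]

for row in upscale_nearest(tiny, 2):
    print(row)
# ['blue', 'blue', 'white', 'white']
# ['blue', 'blue', 'white', 'white']
# ['white', 'white', 'blue', 'blue']
# ['white', 'white', 'blue', 'blue']
```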

|

||||

|

||||

a font, however, is different. almost all fonts on a modern computer are described in a vector format. rather than saying "this pixel is black, this one is white", they say "draw a line from here to here". a list of instructions can be followed at any size. if you ask a computer to show an image of a triangle, it'll get blurry when you zoom in. but if you teach it how to draw a triangle, and then tell it to make it bigger, it can "zoom in" forever without getting blurry. there are no pixels or resolution to worry about.
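to see the "instructions" idea in action, here's a python sketch that describes a triangle by its three corners (in made-up units, not pixels) and then draws it onto a grid of any size. the drawing method, checking whether the centre of each pixel falls inside the triangle, is a simplified stand-in for what real font renderers do, which also have to deal with curves and smoothing:

```python
# The "instructions": a triangle described by its corners, not by pixels.
TRIANGLE = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]

def side(a, b, p):
    """Positive on one side of the line from a to b, negative on the other, 0 on it."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])

def inside(corners, p):
    """A point is inside if it's on the same side of all three edges (or on one)."""
    a, b, c = corners
    sides = (side(a, b, p), side(b, c, p), side(c, a, p))
    return not (min(sides) < 0 < max(sides))

def draw(shape, size):
    """Scale the shape up to `size` pixels and fill in every pixel whose centre is inside."""
    scaled = [(x * size, y * size) for x, y in shape]
    return ["".join("#" if inside(scaled, (x + 0.5, y + 0.5)) else "."
                    for x in range(size))
            for y in range(size)]

print("\n".join(draw(TRIANGLE, 4)))
# ####
# ###.
# ##..
# #...
```

the same three corners can be drawn 4, 40, or 4000 pixels wide, and the edge is worked out fresh each time rather than stretched from a smaller picture.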

|

||||

|

||||

vectors can also be used for images, such as the SVG format. here's an example of one on wikipedia: <https://upload.wikimedia.org/wikipedia/commons/0/02/SVG_logo.svg>

|

||||

even though it's an image, you can zoom in without it ever getting blurry!

|

||||

|

||||

even though you can resize an SVG to your heart's content, it'll never reveal more detail than what it was created with. so you can't "zoom and enhance" a vector image either.

|

||||

|

||||

there are always exceptions to the rule. not all fonts use vector graphics - some use bitmap graphics, and they get blurry like PNGs and JPEGs do too.

|

||||

|

||||

so if vector graphics don't get blurry, why don't we use them for photos? to put it simply, making a vector image is hard. you need to describe every stroke and shape and colour that goes into replicating the drawing. this gets out of hand very quickly when you want to save images of complex scenery (or even just faces). cameras simply can't do this on the fly, and even if they could, the resultant file would be an enormous mess of assumptions and imperfections. the current method of doing things is our best option.

|

||||

|

||||

[view original post](https://fedi.lynnesbian.space/@lynnesbian/101942900073557509)

|

||||

|

||||

|

|

@ -0,0 +1,23 @@

|

|||

---

|

||||

title: "Lynne Teaches Tech: why don't windows programs work on a mac, and vice versa?"

|

||||

author: Lynne

|

||||

categories: [Lynne Teaches Tech, Originally from the Fediverse]

|

||||

tags: [Lynne Teaches Tech]

|

||||

---

|

||||

|

||||

an operating system (OS), such as windows or macOS, handles a lot of low-level stuff. this means that developers don't have to worry about details like "how to scroll a page" or "how to read text from a file", because the OS handles it for you. a computer can't do anything without an OS - it's needed for even the most basic tasks.

|

||||

|

||||

the OS provides you with a huge number of functions you can call to get stuff done. rather than worrying about the fundamentals of reading a file from a hard drive, the OS will provide you with a function that does the job for you. however, every OS does this differently. this means that you can't just run a windows program on macOS because the functions it needs aren't there.

|

||||

|

||||

[wine](https://www.winehq.org/) is a program that translates windows functions into ones that work with macOS or linux. this allows you to actually run windows programs on macOS. when the program asks for a windows function, wine performs the macOS equivalent and pretends it's running on windows. the macOS version of the sims 3 actually runs in a modified version of wine - it's exactly the same as the windows version, just with a wine "wrapper" around it.
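as a very rough illustration of the translation idea, here's a python sketch of a "translation table". the program asks for a function by name, and the layer runs the host system's equivalent instead. the function name `ReadWholeFile` is made up for this example (it isn't a real windows function), and wine itself is enormously more complicated than a lookup table:

```python
import os
import tempfile

def host_read_file(path: str) -> str:
    """The host OS's way of reading a file (here, plain Python)."""
    with open(path, encoding="utf-8") as f:
        return f.read()

# Hypothetical "windows" function names mapped to host equivalents.
TRANSLATIONS = {
    "ReadWholeFile": host_read_file,
}

def call_windows_function(name: str, *args):
    """Pretend to be windows: look up the requested function and run the host version."""
    if name not in TRANSLATIONS:
        raise NotImplementedError(f"no translation for {name}")
    return TRANSLATIONS[name](*args)

# Usage: write a file, then read it back through the translation layer.
with tempfile.TemporaryDirectory() as folder:
    path = os.path.join(folder, "hello.txt")
    with open(path, "w", encoding="utf-8") as f:
        f.write("hello from the host OS")
    print(call_windows_function("ReadWholeFile", path))  # hello from the host OS
```

a program asking for a name that isn't in the table hits the `NotImplementedError`, which is roughly the situation described above: the functions it needs just aren't there.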

|

||||

|

||||

it's possible to make programs that work on windows, macOS, and linux. for example, games made with the engine "unity" can run on all three of these. however, the actual game file is still different for all three - unity just translates the code into versions that work \*individually\* with windows, macOS, or linux. this means that you can't run a windows version of a unity game on macOS.

|

||||

|

||||

a java program can run fine on all three of the above operating systems, but you actually have to install java first - and the installer is specific to your OS. it's not possible to make a program that truly works with all three of these operating systems from a single file with no installers or engines or other behind the scenes work, as the differences are simply too great.

|

||||

|

||||

one more interesting note: [reactOS](https://www.reactos.org/) is an operating system based on wine technology. its goal is to completely simulate windows without actually using any windows code (as this is illegal) by using the same methods wine does. it's still in alpha, but it's really cool!

|

||||

|

||||

(i know there are other operating systems, i just didn't mention them for brevity's sake. sorry \*BSD people.)

|

||||

|

||||

[view original post](https://fedi.lynnesbian.space/web/statuses/101786030032344976)

|

||||

|

||||

33

_posts/lynne-teaches-tech/2019-05-05-lynne-teaches-tech.md

Normal file


|

|

@ -0,0 +1,33 @@

|

|||

---

|

||||

title: "Lynne Teaches Tech: Why does compressing a JPEG make it look worse, even though putting it in a ZIP file makes it look the same?"

|

||||

author: Lynne

|

||||

categories: [Lynne Teaches Tech, Originally from the Fediverse]

|

||||

tags: [Lynne Teaches Tech]

|

||||

---

|

||||

|

||||

there are many different methods of file compression. one of the simplest methods is run length encoding (RLE). the idea is simple: say you have a file like this:


`aaabbbbaaaaa`


you could store it as:


`a3b4a5`


to represent that there are 3 a's, then 4 b's, then 5 a's. you would then need a program to reverse the process - decompression. plain RLE is seldom used on its own, however, as it has a fairly major flaw:


`abcde`


becomes


`a1b1c1d1e1`


which is twice as large! RLE compression works best on data with lots of repetition, and will generally make more random files *larger* rather than smaller.
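
here's a minimal sketch of the RLE scheme described above, in python. it uses the same `<character><count>` layout as the examples, and assumes the input never contains digits (since digits are used for the counts). `rle_encode` and `rle_decode` are just names made up for this example, not part of any real library.

```python
from itertools import groupby


def rle_encode(text: str) -> str:
    """Run-length encode text as <char><count> pairs, e.g. "aaab" -> "a3b1".

    Assumes the input contains no digits, because digits store the counts.
    """
    # groupby splits the text into runs of identical consecutive characters
    return "".join(f"{char}{len(list(run))}" for char, run in groupby(text))


def rle_decode(encoded: str) -> str:
    """Reverse rle_encode by expanding each <char><count> pair into a run."""
    out = []
    i = 0
    while i < len(encoded):
        char = encoded[i]
        i += 1
        start = i
        # a count can be more than one digit long, e.g. "x12"
        while i < len(encoded) and encoded[i].isdigit():
            i += 1
        out.append(char * int(encoded[start:i]))
    return "".join(out)


for original in ["aaabbbbaaaaa", "abcde"]:
    packed = rle_encode(original)
    assert rle_decode(packed) == original  # lossless: we get the exact input back
    print(f"{original!r} ({len(original)} chars) -> {packed!r} ({len(packed)} chars)")
```

running it shows `aaabbbbaaaaa` shrinking from 12 characters to 6, while `abcde` doubles from 5 characters to 10 - the same flaw described above.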


a more complex method is to make a "dictionary" of things contained in the file and use that to make things smaller. for example, you could replace all occurrences of "the united states of america" with "🇺🇸", and then state that "🇺🇸" refers to "the united states of america" in the dictionary. this would allow you to save a (relatively) huge amount of space if the full phrase appears dozens of times.
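
the dictionary idea can be sketched in a few lines of python. this is a toy version, assuming a hand-written dictionary and that the short tokens never appear in the original text; real formats like ZIP (which usually uses an algorithm called DEFLATE) find repeated chunks automatically instead. the function names are made up for this example.

```python
def dict_compress(text: str, dictionary: dict[str, str]) -> str:
    """Replace every long phrase with its short token.

    `dictionary` maps token -> phrase. Assumes no token appears in the
    original text, otherwise decompression would corrupt it.
    """
    for token, phrase in dictionary.items():
        text = text.replace(phrase, token)
    return text


def dict_decompress(text: str, dictionary: dict[str, str]) -> str:
    """Swap every token back for the phrase it stands for."""
    for token, phrase in dictionary.items():
        text = text.replace(token, phrase)
    return text


dictionary = {"🇺🇸": "the united states of america"}
original = "i live in the united states of america. " * 24

packed = dict_compress(original, dictionary)
assert dict_decompress(packed, dictionary) == original  # nothing was lost

# compare sizes in bytes (UTF-8), since bytes are what take up disk space
print(len(original.encode("utf-8")), "bytes ->", len(packed.encode("utf-8")), "bytes")
```

a real compressor would also have to store the dictionary alongside the data, which is why this only pays off when the phrase shows up many times.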


what i've been talking about so far are lossless compression methods: the file is exactly the same after being compressed and decompressed. lossy compression, on the other hand, allows for some data loss. this would be unacceptable in, for example, a computer program or a text file, because you can't just remove a chunk of text or code and approximate what used to be there. you can, however, do this with an image, and that's exactly what JPEG does: it throws away some of the image's data and keeps an approximation that looks close to the original. this is relatively imperceptible at higher quality settings, but becomes more obvious the more you sacrifice quality for size. PNG files, by contrast, are (almost always) lossless. your phone camera still takes photos in JPEG rather than PNG, though, because even though some quality is lost, a photo stored as a PNG would be much, much larger.
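
to get a feel for the lossy trade-off, here's a toy example in python. it's *not* how JPEG actually works (JPEG transforms small blocks of the image and throws away fine detail in that transformed form); it just rounds a made-up row of pixel brightness values (0-255) to coarser steps, then squeezes the result with `zlib`, a lossless compressor from python's standard library. the bigger the step, the more data is lost, and the smaller the compressed result tends to get.

```python
import random
import zlib

random.seed(0)  # fixed seed so the made-up "image" is the same every run

# a made-up row of 1024 pixels: a smooth dark-to-light gradient plus a little noise
pixels = bytes(
    min(255, max(0, i // 4 + random.randint(-3, 3))) for i in range(1024)
)


def quantize(data: bytes, step: int) -> bytes:
    """Lossy step: round each value down to a multiple of `step`.

    The discarded remainder (up to step - 1) can never be recovered.
    """
    return bytes((value // step) * step for value in data)


for step in (1, 8, 32):
    lossy = quantize(pixels, step)
    worst_error = max(abs(a - b) for a, b in zip(pixels, lossy))
    compressed_size = len(zlib.compress(lossy, 9))
    print(f"step {step:2}: worst error {worst_error:2}, compressed to {compressed_size} bytes")
```

with a step of 1, nothing is rounded away at all, which is the lossless case.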


some examples of file formats that typically use lossy compression are JPEG, MP4, MP3, OGG, and AVI. some examples of lossless compression formats are FLAC, PNG, ZIP, RAR, and ALAC. some examples of lossless, uncompressed files are WAV, TXT, JS, BMP, and TAR. in terms of file size, you'll always find that lossy files are smaller than the lossless files they were created from (unless it's a horrendously inefficient compression format), and that losslessly compressed files are usually smaller than uncompressed ones.


you'll find that putting a long text file in a zip makes it much smaller, but putting an MP3 in a zip has much less of an effect. this is because MP3 files are already compressed quite efficiently, so there's not much left for a lossless algorithm to squeeze out.
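
you can try this yourself with python's built-in `zlib` module, which uses DEFLATE, the same algorithm ZIP files usually use. rather than assuming you have an MP3 lying around, this sketch uses random bytes as a stand-in: well-compressed data like an MP3 looks a lot like random noise to a lossless compressor, so it behaves similarly.

```python
import os
import zlib

# highly repetitive text, standing in for a long text file
text = ("the quick brown fox jumps over the lazy dog. " * 2000).encode("utf-8")

# random bytes, standing in for already-compressed data such as an MP3
already_compressed = os.urandom(len(text))

for name, data in [("text", text), ("random bytes", already_compressed)]:
    zipped = zlib.compress(data)
    percent = 100 * len(zipped) / len(data)
    print(f"{name}: {len(data)} -> {len(zipped)} bytes ({percent:.1f}% of original)")
```

the text shrinks to a tiny fraction of its size, while the random bytes come out about the same size (usually very slightly larger), because there are no patterns left to find. real text is less repetitive than this example, so it won't shrink quite as dramatically.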


there are benefits to all three types of formats. lossily compressed files are the smallest, losslessly compressed files are perfectly true to the original sound/image/etc while still being much smaller than uncompressed ones, and uncompressed data is very easy for computers to work with, as they don't have to run any compression or decompression algorithms on it. this is (partly) why BMP and WAV files still exist, despite PNG and FLAC being much more efficient.


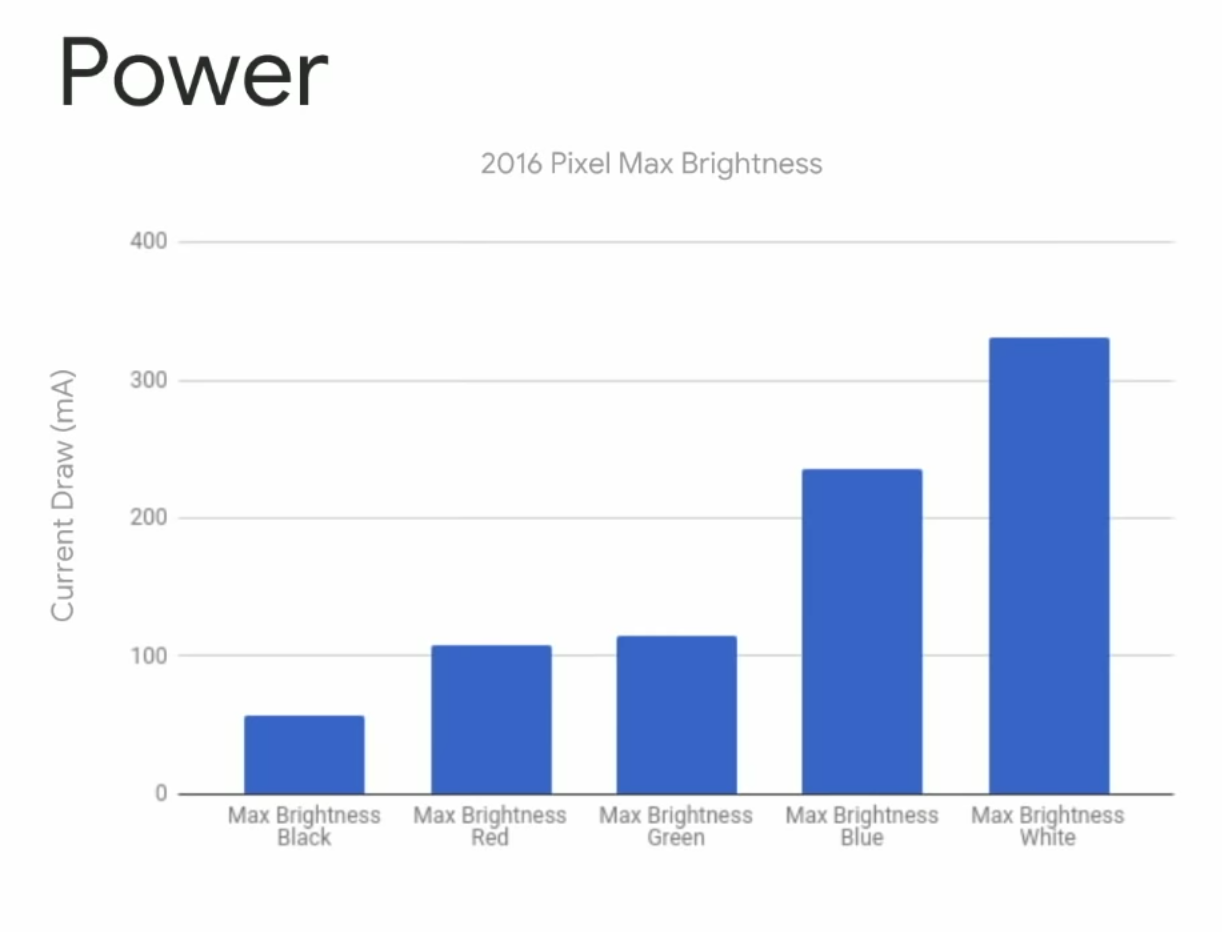

as an example of how dramatic these differences often are, i looked at the file sizes for the downloadable version of master boot record's album "internet protocol" in three formats: WAV, FLAC, and MP3.


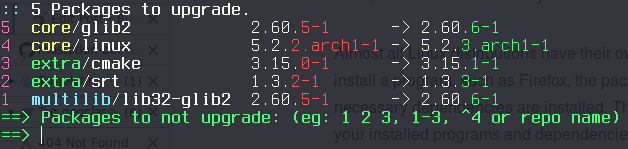


you can see that the file size (shown in megabytes) is nearly 90 megabytes smaller with the FLAC version, and the MP3 version is only about 13% of the size of the WAV version. note that these downloads are in ZIP format - the unzipped WAV files would be even larger than shown here. this is not representative of all compression algorithms, nor is it representative of all music - it's just an illustrative example.

TV static in particular compresses very poorly, because it's so random, which makes it hard for algorithms to find patterns. watch a youtube video of TV static to see this in action - you'll notice obvious "block" shapes and blurriness that shouldn't be there as the algorithm struggles to do its job. the compression on youtube is particularly strong so that its servers can keep up with the enormous demand, but not so strong that videos become blurry, unwatchable messes.


@ -0,0 +1,22 @@


---
title: "Lynne Teaches Tech: Why did everyone's Firefox add-ons get disabled around May 4th?"
author: Lynne
categories: [Lynne Teaches Tech]
tags: [Lynne Teaches Tech]
---


Mozilla, the company behind Firefox, have implemented a number of security checks in their browser related to extensions. One such check is that all add-ons must be signed with a digital certificate. This certificate is like a [HTTPS certificate](https://bune.city/2019/05/lynne-teaches-tech-what-does-https-mean/) - the thing that gives you a green padlock in your browser's URL bar.


You've probably seen a HTTPS error before. This happens when a site's certificate is invalid for one reason or another. One such reason is that the certificate has expired.


<!--more-->


HTTPS certificates are only valid for a certain amount of time. When that time runs out, they need to be renewed. This is done to ensure that the person with the certificate is still running the website, and is still interested in keeping the certificate.


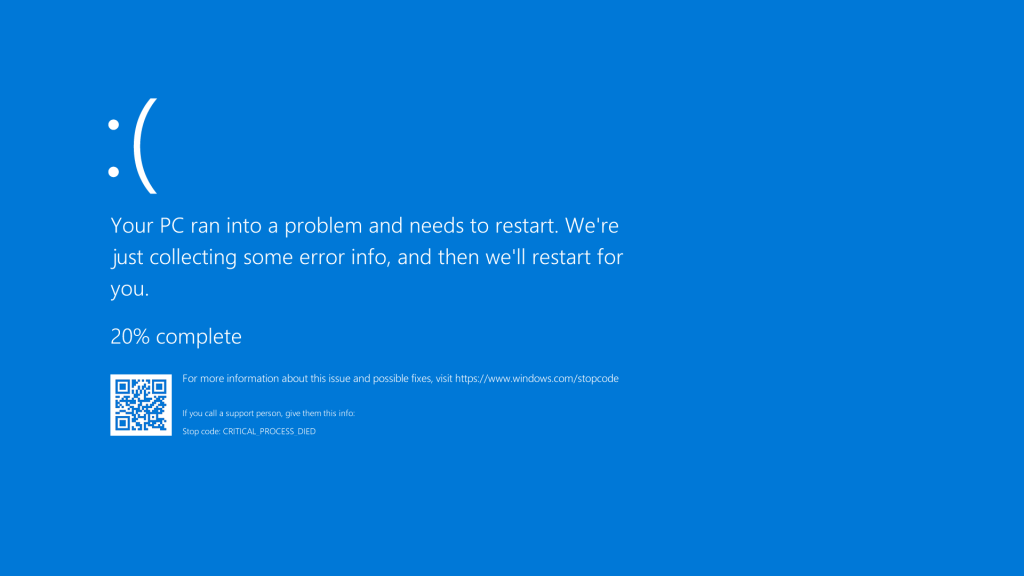


When a certificate expires, your browser will refuse to connect to the website. A similar issue happened with Firefox - their own add-on signing certificate expired on the 4th of May, 00:09 UTC, [causing everyone's add-ons to be disabled](https://support.mozilla.org/en-US/kb/add-ons-disabled-or-fail-to-install-firefox) once that time passed.


One would think that Firefox wouldn't disable an add-on that had been signed while the certificate was still valid, but apparently that's not how it works. Even so, this could have been avoided if anyone had remembered to renew the certificate, which nobody did. This is a particularly embarrassing issue for Firefox, considering both how easily it could have been avoided and the fact that it really shouldn't have been possible for this to happen in the first place. It also raises the question: What happens if Mozilla disappears, and people keep using Firefox? Thankfully, there are ways to disable extension signing, which means that you can protect yourself from this ever happening again, but note that **doing this is a minor security risk**.
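
The difference between "is the certificate valid right now?" and "was the certificate valid when the add-on was signed?" can be sketched in Python. This is a simplification, not how Firefox actually checks signatures: the expiry time comes from this post, but the certificate's start date and the add-on's signing date are made up for the example, and the function names are hypothetical.

```python
from datetime import datetime, timezone

# Expiry of Mozilla's add-on signing certificate, as described in this post.
CERT_NOT_AFTER = datetime(2019, 5, 4, 0, 9, tzinfo=timezone.utc)
# Hypothetical start of the certificate's validity window (made up).
CERT_NOT_BEFORE = datetime(2017, 5, 4, 0, 9, tzinfo=timezone.utc)


def cert_valid_at(moment: datetime) -> bool:
    """True if the certificate's validity window covers `moment`."""
    return CERT_NOT_BEFORE <= moment <= CERT_NOT_AFTER


def addon_allowed_check_now(now: datetime) -> bool:
    # Approach A (what happened): the certificate must be valid right now.
    return cert_valid_at(now)


def addon_allowed_check_signing_time(signed_at: datetime) -> bool:
    # Approach B: the certificate only needs to have been valid
    # at the moment the add-on was signed.
    return cert_valid_at(signed_at)


signed_at = datetime(2018, 6, 1, tzinfo=timezone.utc)  # hypothetical signing date
just_after_expiry = datetime(2019, 5, 4, 0, 10, tzinfo=timezone.utc)

print("check against current time:", addon_allowed_check_now(just_after_expiry))
print("check against signing time:", addon_allowed_check_signing_time(signed_at))
```

In practice, checking against the signing time is only safe if that time comes from a trusted timestamp, since otherwise anyone could simply claim that their add-on was signed before the certificate expired.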


One could argue that by remotely disabling some of your browser's functionality, intentionally or not, Mozilla is violating the [four essential freedoms](https://fsfe.org/freesoftware/basics/4freedoms.en.html), specifically the right to use the program for any purpose.


@ -0,0 +1,64 @@


---
title: "Lynne Teaches Tech: What are all the different types and versions of USB about?"
author: Lynne